Gateway API: Can I replace my Ingress Controller with Cilium?

Overview

When deploying an application on Kubernetes, the next step usually involves making it accessible to users. We commonly use Ingress controllers, such as Nginx, Haproxy, Traefik, or those from Cloud providers, to direct incoming traffic to the application, manage load balancing, TLS termination, and more.

Then we have to choose from the plethora of available options 🤯. Cilium, a relatively recent addition to this list, aims to handle all these networking aspects.

Cilium is an Open-Source networking and security solution based on eBPF whose adoption is growing rapidly. It's probably the network plugin that provides the most features. We won't cover all of them, but one such feature involves managing incoming traffic using the Gateway API (GAPI).

🎯 Our target

- Understand exactly what the Gateway API is and how it represents an evolution from the Ingress API.

- Demonstrations of real-world scenarios deployed the GitOps way.

- Current limitations and upcoming developments.

All the steps carried out in this article come from this git repository.

I encourage you to explore it, as it goes far beyond the context of this article:

- Installation of an EKS cluster with Cilium configured with the kube-proxy replacement enabled and a dedicated DaemonSet for Envoy.

- Proposal of a Flux structure with dependency management and DRY code that I think is efficient.

- A Crossplane and IRSA composition which simplifies the management of IAM permissions for platform components.

- Automated domain names and certificates management with External-DNS and Let's Encrypt.

The idea is to have everything set up in just a few minutes, with a single command line 🤩.

☸ Introduction to Gateway API

As mentioned previously, there are many Ingress Controller options, and each has its own specificities and particular features, sometimes making their use complex. Furthermore, the traditional Ingress API in Kubernetes has very limited parameters. Some solutions have even created their own CRDs (Kubernetes Custom Resources) while others use annotations to overcome these limitations.

Here comes the Gateway API! This is actually a standard that allows declaring advanced networking features without requiring specific extensions to the underlying controller. Moreover, since all controllers use the same API, it is possible to switch from one solution to another without changing the configuration (the Kubernetes manifests which describe how the incoming traffic should be routed).

Among the concepts that we will explore, GAPI brings a granular authorization model which defines explicit roles with distinct permissions. (More information on the GAPI security model here).

It is worth noting that this project is driven by the Kubernetes sig-network working group, and there's a Slack channel where you can reach out to them if needed.

Let's see how GAPI is used in practice with Cilium 🚀!

☑️ Prerequisites

For the remainder of this article, we assume an EKS cluster has been deployed. If you're not using the method suggested in the demo repo as the basis for this article, there are a few points to check for GAPI to be usable.

ℹ️ The installation method described here is based on Helm; all the values can be viewed here.

- Install the CRDs available in the Gateway API repository.

  Note: If Cilium is set up with GAPI support (see below) and the CRDs are missing, it won't start. In the demo repo, the GAPI CRDs are installed once during the cluster creation so that Cilium can start, and then they are managed by Flux.

- Replace kube-proxy with the network forwarding features provided by Cilium and eBPF.

```yaml
kubeProxyReplacement: true
```

- Enable Gateway API support.

```yaml
gatewayAPI:
  enabled: true
```

- Check the installation. For that, you need to install the `cilium` command-line tool. I personally use asdf:

```shell
asdf plugin-add cilium-cli
asdf install cilium-cli 0.15.7
asdf global cilium-cli 0.15.7
```

The following command ensures that all the components are up and running:

```shell
cilium status --wait
    /¯¯\
 /¯¯\__/¯¯\    Cilium:             OK
 \__/¯¯\__/    Operator:           OK
 /¯¯\__/¯¯\    Envoy DaemonSet:    OK
 \__/¯¯\__/    Hubble Relay:       disabled
    \__/       ClusterMesh:        disabled

Deployment             cilium-operator    Desired: 2, Ready: 2/2, Available: 2/2
DaemonSet              cilium             Desired: 2, Ready: 2/2, Available: 2/2
DaemonSet              cilium-envoy       Desired: 2, Ready: 2/2, Available: 2/2
Containers:            cilium             Running: 2
                       cilium-operator    Running: 2
                       cilium-envoy       Running: 2
Cluster Pods:          33/33 managed by Cilium
Helm chart version:    1.14.2
Image versions         cilium             quay.io/cilium/cilium:v1.14.2@sha256:6263f3a3d5d63b267b538298dbeb5ae87da3efacf09a2c620446c873ba807d35: 2
                       cilium-operator    quay.io/cilium/operator-aws:v1.14.2@sha256:8d514a9eaa06b7a704d1ccead8c7e663334975e6584a815efe2b8c15244493f1: 2
                       cilium-envoy       quay.io/cilium/cilium-envoy:v1.25.9-e198a2824d309024cb91fb6a984445e73033291d@sha256:52541e1726041b050c5d475b3c527ca4b8da487a0bbb0309f72247e8127af0ec: 2
```

Finally, you can check that the Gateway API support is enabled by running:

```shell
cilium config view | grep -w "enable-gateway-api"
enable-gateway-api                                true
enable-gateway-api-secrets-sync                   true
```

You could also run end-to-end tests as follows:

```shell
cilium connectivity test
```

⚠️ However, this command (`connectivity test`) currently throws errors when Envoy is deployed as a DaemonSet (Github Issue).

ℹ️ Envoy as DaemonSet

By default, the Cilium agent also runs Envoy within the same pod and delegates Layer 7 network operations to it. Since version v1.14, it is possible to deploy Envoy separately, which brings several benefits:

- If one modifies/restarts a component (whether it's Cilium or Envoy), it doesn't affect the other.

- Better allocate resources to each component to optimize performance.

- Limits the attack surface in case of compromise of one of the pods.

- Envoy logs and Cilium agent logs are not mixed.
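For reference, here is a sketch of Helm values combining the settings above with the standalone Envoy DaemonSet. It assumes Cilium 1.14, where `envoy.enabled` moves Envoy into its own `cilium-envoy` DaemonSet. Since kube-proxy is replaced, the agent can't rely on the `kubernetes` Service to reach the API server, hence `k8sServiceHost`/`k8sServicePort`; the endpoint value below is a placeholder, not a real address.

```yaml
# Cilium Helm values (sketch, assuming Cilium 1.14)
kubeProxyReplacement: true
# Without kube-proxy, the agent must be told where the API server is
k8sServiceHost: "<API_SERVER_ENDPOINT>"  # placeholder, e.g. the EKS API endpoint hostname
k8sServicePort: 443
gatewayAPI:
  enabled: true
# Run Envoy in a dedicated DaemonSet (cilium-envoy) instead of inside the agent pod
envoy:
  enabled: true
```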

You can use the following command to check that this feature is indeed active:

```shell
cilium status
    /¯¯\
 /¯¯\__/¯¯\    Cilium:             OK
 \__/¯¯\__/    Operator:           OK
 /¯¯\__/¯¯\    Envoy DaemonSet:    OK
 \__/¯¯\__/    Hubble Relay:       disabled
    \__/       ClusterMesh:        disabled
```
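The CRD installation step listed in the prerequisites can also be declared so that Flux manages the CRDs afterwards. Below is a minimal sketch of a `kustomization.yaml`, assuming the Gateway API v0.7.0 CRDs, which is what I understand Cilium 1.14 expects; verify the version against the Cilium documentation for your release. The file location is hypothetical, and the experimental TLSRoute CRD is only needed for TLS passthrough.

```yaml
# kustomization.yaml (hypothetical location, e.g. a crds/gateway-api directory watched by Flux)
apiVersion: kustomize.config.k8s.io/v1beta1
kind: Kustomization
resources:
  # Standard channel
  - https://raw.githubusercontent.com/kubernetes-sigs/gateway-api/v0.7.0/config/crd/standard/gateway.networking.k8s.io_gatewayclasses.yaml
  - https://raw.githubusercontent.com/kubernetes-sigs/gateway-api/v0.7.0/config/crd/standard/gateway.networking.k8s.io_gateways.yaml
  - https://raw.githubusercontent.com/kubernetes-sigs/gateway-api/v0.7.0/config/crd/standard/gateway.networking.k8s.io_httproutes.yaml
  - https://raw.githubusercontent.com/kubernetes-sigs/gateway-api/v0.7.0/config/crd/standard/gateway.networking.k8s.io_referencegrants.yaml
  # Experimental channel: TLSRoute (TLS passthrough)
  - https://raw.githubusercontent.com/kubernetes-sigs/gateway-api/v0.7.0/config/crd/experimental/gateway.networking.k8s.io_tlsroutes.yaml
```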

🚪 The Entry Point: GatewayClass and Gateway

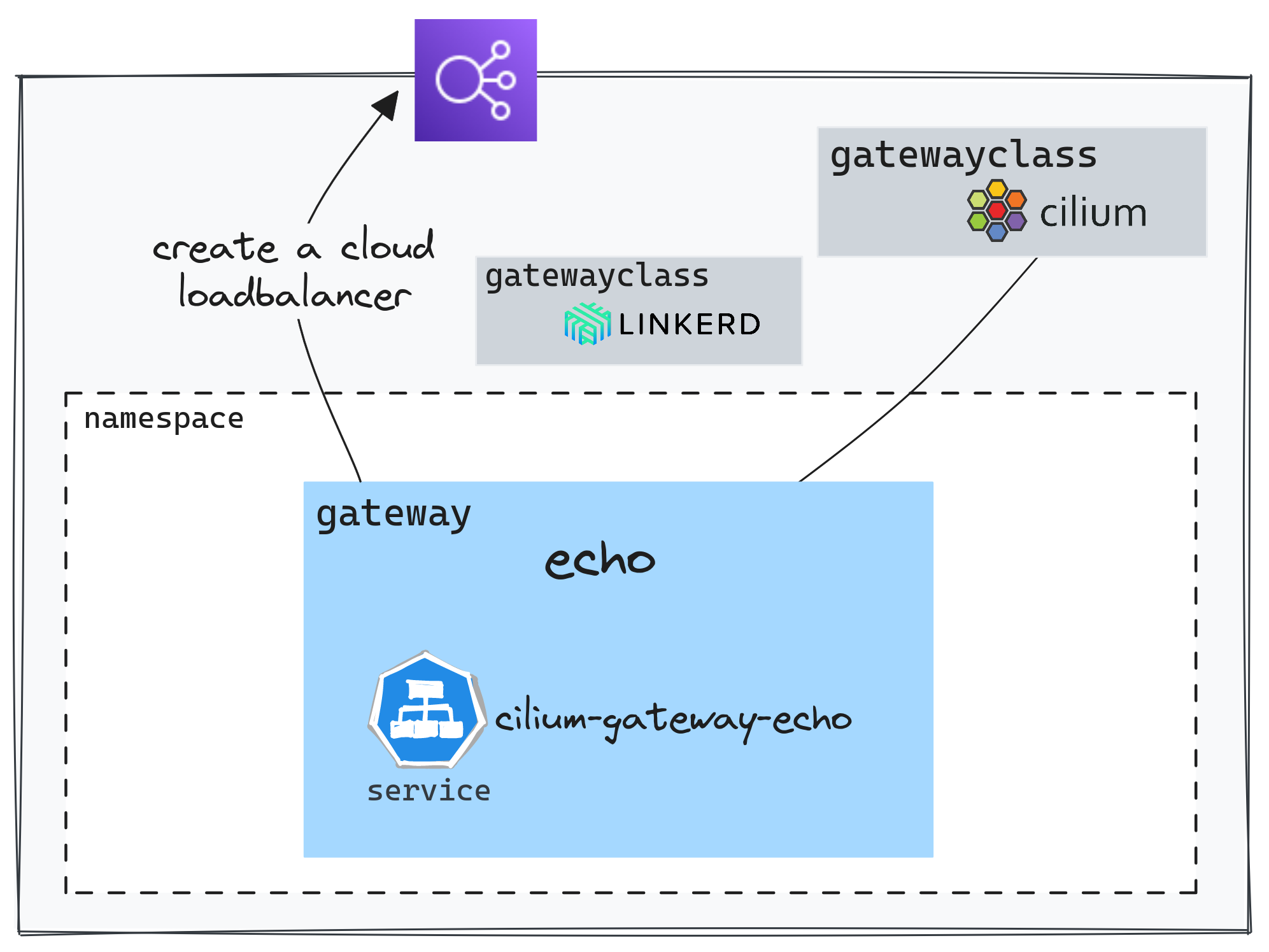

Once the conditions are met, we have access to several elements. We can make use of the custom resources defined by the Gateway API CRDs. Moreover, right after installing Cilium, a GatewayClass is immediately available.

```shell
kubectl get gatewayclasses.gateway.networking.k8s.io
NAME     CONTROLLER                     ACCEPTED   AGE
cilium   io.cilium/gateway-controller   True       7m59s
```

On a Kubernetes cluster, you could configure multiple GatewayClasses, thus having the ability to use different implementations. For instance, we can use Linkerd by referencing the GatewayClass in the Gateway configuration.
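A GatewayClass is a small cluster-scoped object that binds a class name to a controller. The sketch below shows what the `cilium` class listed above amounts to; a second class for another implementation would carry a different `controllerName` and be selected by Gateways through `gatewayClassName`.

```yaml
# Cluster-scoped: no namespace
apiVersion: gateway.networking.k8s.io/v1beta1
kind: GatewayClass
metadata:
  name: cilium
spec:
  # Must match the CONTROLLER column returned by kubectl above
  controllerName: io.cilium/gateway-controller
```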

The Gateway is the resource that allows triggering the creation of load balancing components in the Cloud provider.

Here's a simple example: apps/base/echo/gateway.yaml

```yaml
apiVersion: gateway.networking.k8s.io/v1beta1
kind: Gateway
metadata:
  name: echo
  namespace: echo
spec:
  gatewayClassName: cilium
  listeners:
    - protocol: HTTP
      port: 80
      name: echo-1-echo-server
      allowedRoutes:
        namespaces:
          from: Same
```

On AWS (EKS), when configuring a Gateway, Cilium creates a Service of type LoadBalancer. Then another controller (the AWS Load Balancer Controller) handles the creation of the cloud load balancer (NLB).

```shell
kubectl get svc -n echo cilium-gateway-echo
NAME                  TYPE           CLUSTER-IP     EXTERNAL-IP                                                                 PORT(S)        AGE
cilium-gateway-echo   LoadBalancer   172.20.19.82   k8s-echo-ciliumga-64708ec85c-fcb7661f1ae4e4a4.elb.eu-west-3.amazonaws.com   80:30395/TCP   2m58s
```

It is worth noting that the load balancer address is also reported in the Gateway's status.

```shell
kubectl get gateway -n echo echo
NAME   CLASS    ADDRESS                                                                     PROGRAMMED   AGE
echo   cilium   k8s-echo-ciliumga-64708ec85c-fcb7661f1ae4e4a4.elb.eu-west-3.amazonaws.com   True         16m
```

↪️ Routing rules: HTTPRoute

A basic rule

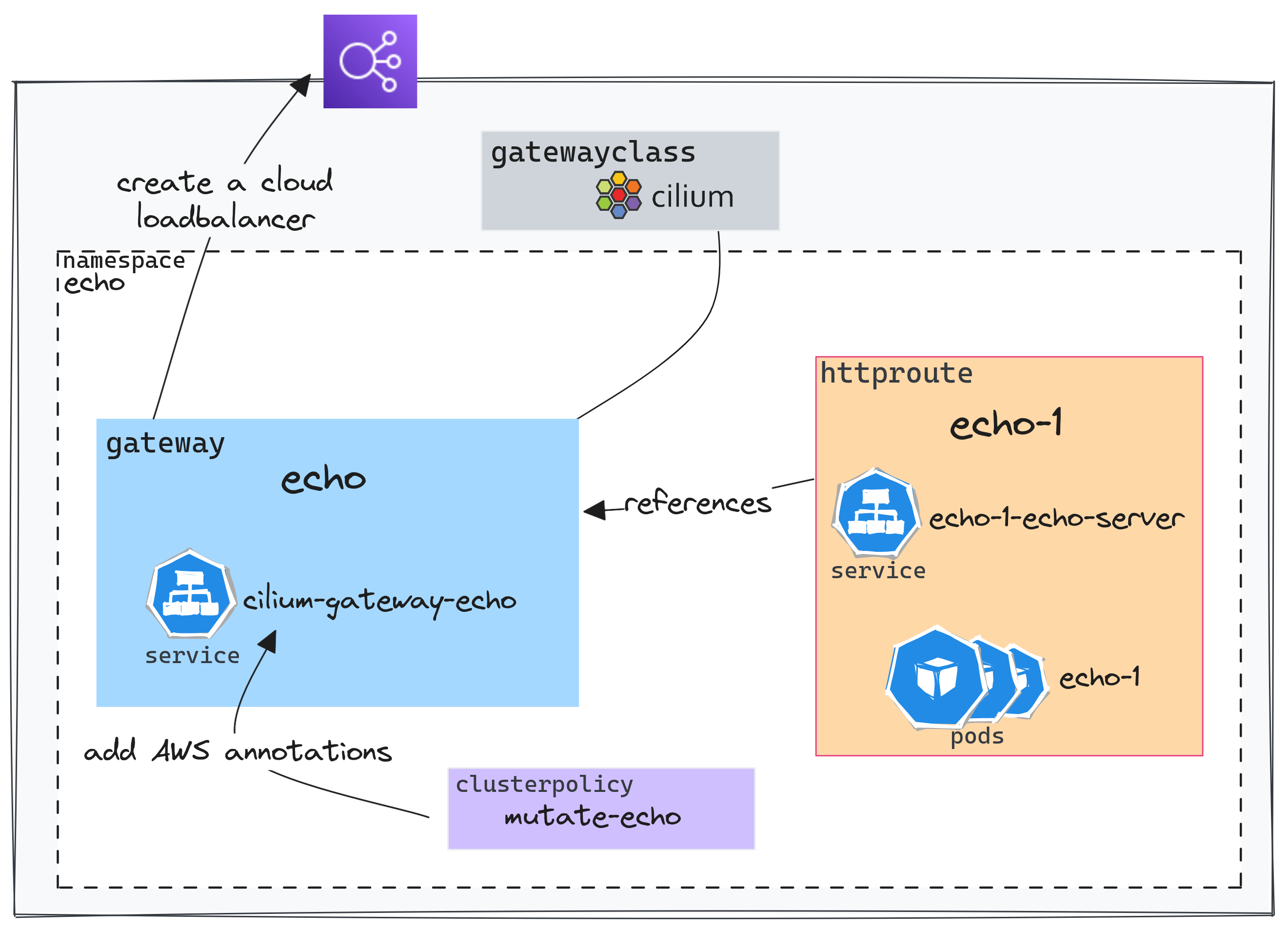

To summarize the above diagram in a few words: an HTTPRoute allows configuring the routing to the service by referencing the Gateway and defining the desired routing parameters.

Workaround

As of now, it is not possible to configure the annotations of services generated by the Gateways (Github Issue). A workaround has been proposed to modify the service generated by the Gateway as soon as it is created.

Kyverno is a tool that ensures configuration compliance with best practices and security requirements. We are using it here solely for its ability to easily describe a mutation rule.

security/mycluster-0/echo-gw-clusterpolicy.yaml

```yaml
spec:
  rules:
    - name: mutate-svc-annotations
      match:
        any:
          - resources:
              kinds:
                - Service
              namespaces:
                - echo
              name: cilium-gateway-echo
      mutate:
        patchStrategicMerge:
          metadata:
            annotations:
              external-dns.alpha.kubernetes.io/hostname: echo.${domain_name}
              service.beta.kubernetes.io/aws-load-balancer-scheme: "internet-facing"
              service.beta.kubernetes.io/aws-load-balancer-backend-protocol: tcp
          spec:
            loadBalancerClass: service.k8s.aws/nlb
```

The service cilium-gateway-echo will therefore have the AWS controller's annotations added, as well as an annotation allowing for automatic DNS record configuration.

```yaml
apiVersion: gateway.networking.k8s.io/v1beta1
kind: HTTPRoute
metadata:
  name: echo-1
  namespace: echo
spec:
  parentRefs:
    - name: echo
      namespace: echo
  rules:
    - matches:
        - path:
            type: PathPrefix
            value: /
      backendRefs:
        - name: echo-1-echo-server
          port: 80
```

The example used above is very simple: all requests are forwarded to the echo-1-echo-server service. parentRefs indicates which Gateway to use, and the routing rules are defined under the rules section.
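When a Gateway exposes several listeners, a route can be attached to a single one of them with `sectionName`, which must match a listener `name`. A sketch reusing the listener of the simple Gateway shown earlier; note that `echo-1-echo-server` in `sectionName` is the listener name, which happens to equal the Service name in that example.

```yaml
apiVersion: gateway.networking.k8s.io/v1beta1
kind: HTTPRoute
metadata:
  name: echo-1
  namespace: echo
spec:
  parentRefs:
    - name: echo
      namespace: echo
      # Attach only to this listener of the Gateway (a listener name, not a Service)
      sectionName: echo-1-echo-server
  rules:
    - matches:
        - path:
            type: PathPrefix
            value: /
      backendRefs:
        - name: echo-1-echo-server
          port: 80
```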

Routing rules can also be based on the hostname and the path:

```yaml
...
spec:
  hostnames:
    - foo.bar.com
  rules:
    - matches:
        - path:
            type: PathPrefix
            value: /login
```

Or based on an HTTP header:

```yaml
...
spec:
  rules:
    - matches:
        - headers:
            - name: "version"
              value: "2"
...
```
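These conditions can be combined. According to the Gateway API specification, conditions inside a single `matches` entry must all hold (AND), while separate entries of `matches` are alternatives (OR). A sketch illustrating both, reusing the `echo-1`/`echo-2` services from this article; the header values are only illustrative.

```yaml
...
spec:
  rules:
    # One match entry: path prefix /login AND header version=2
    - matches:
        - path:
            type: PathPrefix
            value: /login
          headers:
            - name: "version"
              value: "2"
      backendRefs:
        - name: echo-2-echo-server
          port: 80
    # Two match entries: path prefix /login OR header version=1
    - matches:
        - path:
            type: PathPrefix
            value: /login
        - headers:
            - name: "version"
              value: "1"
      backendRefs:
        - name: echo-1-echo-server
          port: 80
```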

Let's check if the service is reachable:

```shell
curl -s http://echo.cloud.ogenki.io | jq -rc '.environment.HOSTNAME'
echo-1-echo-server-fd88497d-w6sgn
```

As you can see, the service is exposed in HTTP without a certificate. Let's try to fix that 😉

Configure a TLS certificate

There are several methods to configure TLS with GAPI. Here, we will use the most common case: HTTPS protocol and TLS termination at the Gateway.

Let's assume we want to configure the domain name echo.cloud.ogenki.io used earlier. The configuration is mainly done by configuring the Gateway.

apps/base/echo/tls-gateway.yaml

```yaml
apiVersion: gateway.networking.k8s.io/v1beta1
kind: Gateway
metadata:
  name: echo
  namespace: echo
  annotations:
    cert-manager.io/cluster-issuer: letsencrypt-prod
spec:
  gatewayClassName: cilium
  listeners:
    - name: http
      hostname: "echo.${domain_name}"
      port: 443
      protocol: HTTPS
      allowedRoutes:
        namespaces:
          from: Same
      tls:
        mode: Terminate
        certificateRefs:
          - name: echo-tls
```

The essential point here is the reference to a secret containing the certificate named echo-tls. This certificate can be created manually, but for this article, I chose to automate this with Let's Encrypt and cert-manager.

cert-manager

With cert-manager, it's pretty straightforward to automate the creation and renewal of certificates exposed by the Gateway. For this, you need to allow the controller to access Route53 in order to solve a DNS01 challenge (a mechanism that ensures that clients can only request certificates for domains they own).

A ClusterIssuer resource describes the required configuration to generate certificates with cert-manager.
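A minimal sketch of such a ClusterIssuer, assuming Let's Encrypt's production ACME endpoint, a DNS01 solver on Route53 whose AWS credentials come from IRSA (so no access keys appear in the manifest), and the eu-west-3 region seen earlier. The email and the account key secret name are hypothetical. Also note that cert-manager only picks up Gateways once its Gateway API support is enabled, and how to enable it depends on the cert-manager version.

```yaml
apiVersion: cert-manager.io/v1
kind: ClusterIssuer
metadata:
  # Name referenced by the cert-manager.io/cluster-issuer annotation on the Gateway
  name: letsencrypt-prod
spec:
  acme:
    server: https://acme-v02.api.letsencrypt.org/directory
    email: admin@example.com  # hypothetical contact address
    privateKeySecretRef:
      name: letsencrypt-prod-account-key  # hypothetical secret for the ACME account key
    solvers:
      - selector:
          dnsZones:
            - "cloud.ogenki.io"
        dns01:
          route53:
            # Credentials are expected from the service account (IRSA)
            region: eu-west-3
```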

Next, we just need to add the cert-manager.io/cluster-issuer annotation to the Gateway and set the name of the Kubernetes secret where the certificate will be stored (certificateRefs).

ℹ️ In the demo repo, permissions are assigned using Crossplane, which takes care of configuring these IAM permissions in AWS.

For routing to work correctly, you also need to attach the HTTPRoute to the right Gateway and specify the domain name.

```yaml
apiVersion: gateway.networking.k8s.io/v1beta1
kind: HTTPRoute
metadata:
  name: echo-1
  namespace: echo
spec:
  parentRefs:
    - name: echo
      namespace: echo
  hostnames:
    - "echo.${domain_name}"
...
```

After a few seconds the certificate will be created.

```shell
kubectl get cert -n echo
NAME       READY   SECRET     AGE
echo-tls   True    echo-tls   43m
```

Finally, we can check that the certificate indeed comes from Let's Encrypt as follows:

```shell
curl https://echo.cloud.ogenki.io -v 2>&1 | grep -A 6 'Server certificate'
* Server certificate:
*  subject: CN=echo.cloud.ogenki.io
*  start date: Sep 15 14:43:00 2023 GMT
*  expire date: Dec 14 14:42:59 2023 GMT
*  subjectAltName: host "echo.cloud.ogenki.io" matched cert's "echo.cloud.ogenki.io"
*  issuer: C=US; O=Let's Encrypt; CN=R3
*  SSL certificate verify ok.
```

GAPI also allows you to configure end-to-end TLS, all the way to the container.

This is done by configuring the Gateway in Passthrough mode and using a TLSRoute resource.

The certificate must also be carried by the pod that performs the TLS termination.
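A sketch of that Passthrough setup, assuming the experimental TLSRoute CRD (v1alpha2) is installed. The hostname, Gateway, route and Service names are hypothetical. The backend is expected to serve TLS itself on port 443 with its own certificate, because the Gateway only routes on the SNI and never decrypts the traffic (hence no certificateRefs).

```yaml
apiVersion: gateway.networking.k8s.io/v1beta1
kind: Gateway
metadata:
  name: echo-passthrough  # hypothetical
  namespace: echo
spec:
  gatewayClassName: cilium
  listeners:
    - name: tls-passthrough
      hostname: "secure-echo.${domain_name}"  # hypothetical hostname
      port: 443
      protocol: TLS
      tls:
        # Forward the encrypted stream as-is to the backend
        mode: Passthrough
      allowedRoutes:
        namespaces:
          from: Same
---
apiVersion: gateway.networking.k8s.io/v1alpha2
kind: TLSRoute
metadata:
  name: echo-passthrough
  namespace: echo
spec:
  parentRefs:
    - name: echo-passthrough
  hostnames:
    - "secure-echo.${domain_name}"
  rules:
    - backendRefs:
        - name: echo-tls-server  # hypothetical Service terminating TLS in the pod
          port: 443
```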

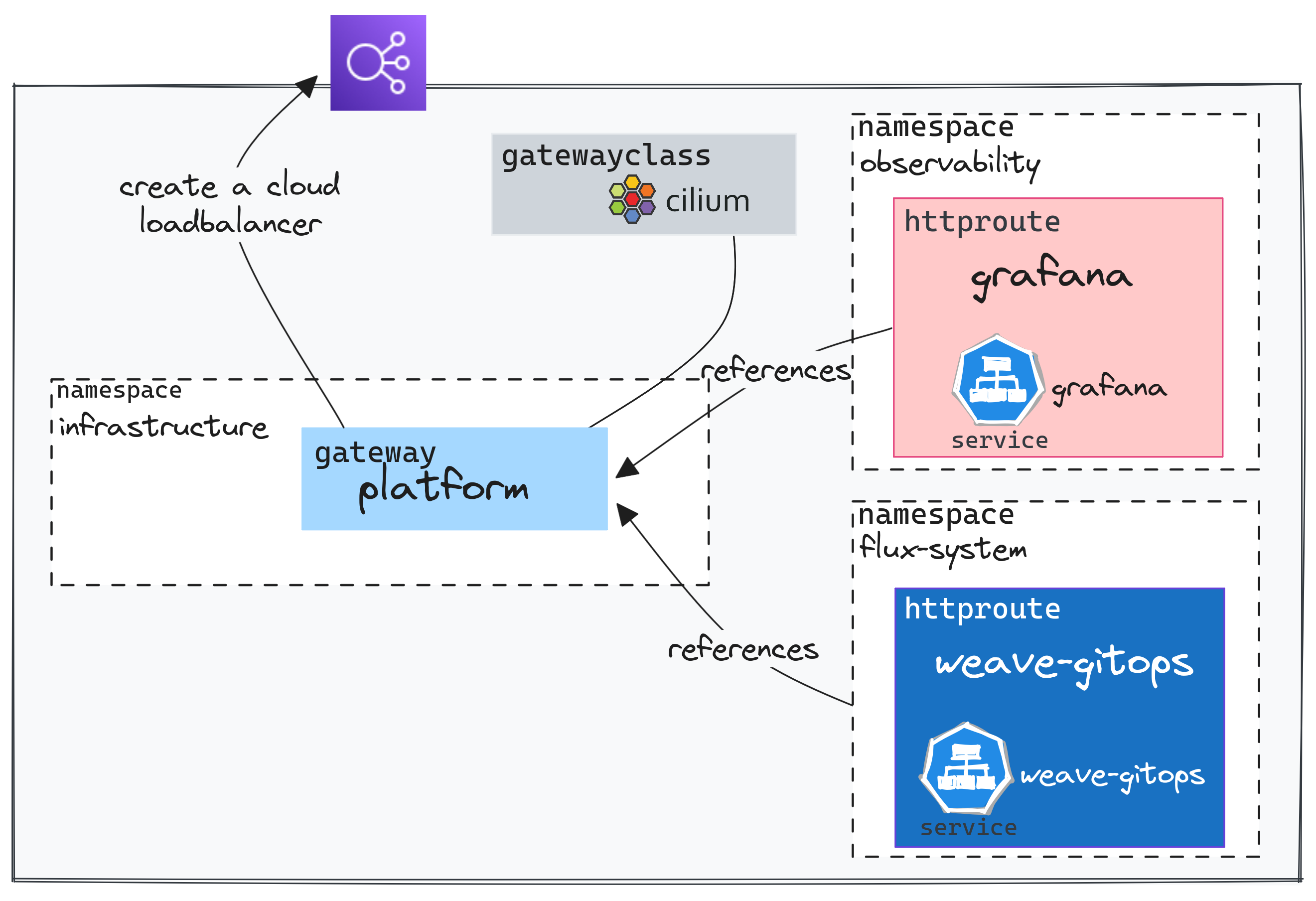

Sharing a Gateway across multiple namespaces

With GAPI, you can route traffic across Namespaces. This is made possible thanks to distinct resources for each function: A Gateway that allows configuring the infrastructure, and the *Routes. These routes can be attached to a Gateway located in another namespace. It is thus possible for different teams/projects to share the same infrastructure components.

However, this requires specifying which routes are allowed to reference the Gateway. Here we assume that we have a Gateway dedicated to internal tools called platform.

By using the allowedRoutes parameter, we explicitly specify which namespaces are allowed to attach routes to this Gateway.

infrastructure/base/gapi/platform-gateway.yaml

```yaml
...
      allowedRoutes:
        namespaces:
          from: Selector
          selector:
            matchExpressions:
              - key: kubernetes.io/metadata.name
                operator: In
                values:
                  - observability
                  - flux-system
      tls:
        mode: Terminate
        certificateRefs:
          - name: platform-tls
```

The HTTPRoutes configured in the namespaces observability and flux-system are attached to this unique Gateway.

```yaml
...
spec:
  parentRefs:
    - name: platform
      namespace: infrastructure
```
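For completeness, a full HTTPRoute attached to the shared platform Gateway could look like the sketch below. The Grafana Service name and port are assumptions. Since the backend lives in the route's own namespace, no ReferenceGrant is needed. The route's hostnames must also be compatible with the listener hostname of the Gateway, which isn't shown in the excerpt above.

```yaml
apiVersion: gateway.networking.k8s.io/v1beta1
kind: HTTPRoute
metadata:
  name: grafana
  # Allowed by the Gateway's namespace selector
  namespace: observability
spec:
  parentRefs:
    # Gateway living in another namespace
    - name: platform
      namespace: infrastructure
  hostnames:
    - "grafana-mycluster-0.${domain_name}"
  rules:
    - matches:
        - path:
            type: PathPrefix
            value: /
      backendRefs:
        - name: grafana  # hypothetical Service name
          port: 80       # hypothetical port
```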

They therefore share the same cloud load balancer:

```shell
NLB_DOMAIN=$(kubectl get svc -n infrastructure cilium-gateway-platform -o jsonpath='{.status.loadBalancer.ingress[0].hostname}')

dig +short ${NLB_DOMAIN}
13.36.89.108

dig +short grafana-mycluster-0.cloud.ogenki.io
13.36.89.108

dig +short gitops-mycluster-0.cloud.ogenki.io
13.36.89.108
```

🔒 These internal tools shouldn't be exposed on the Internet, but you know: this is just a demo 🙏. For instance, we could use an internal Gateway (private IP) by playing with the annotations and make use of a private connection system (VPN, tunnels...).

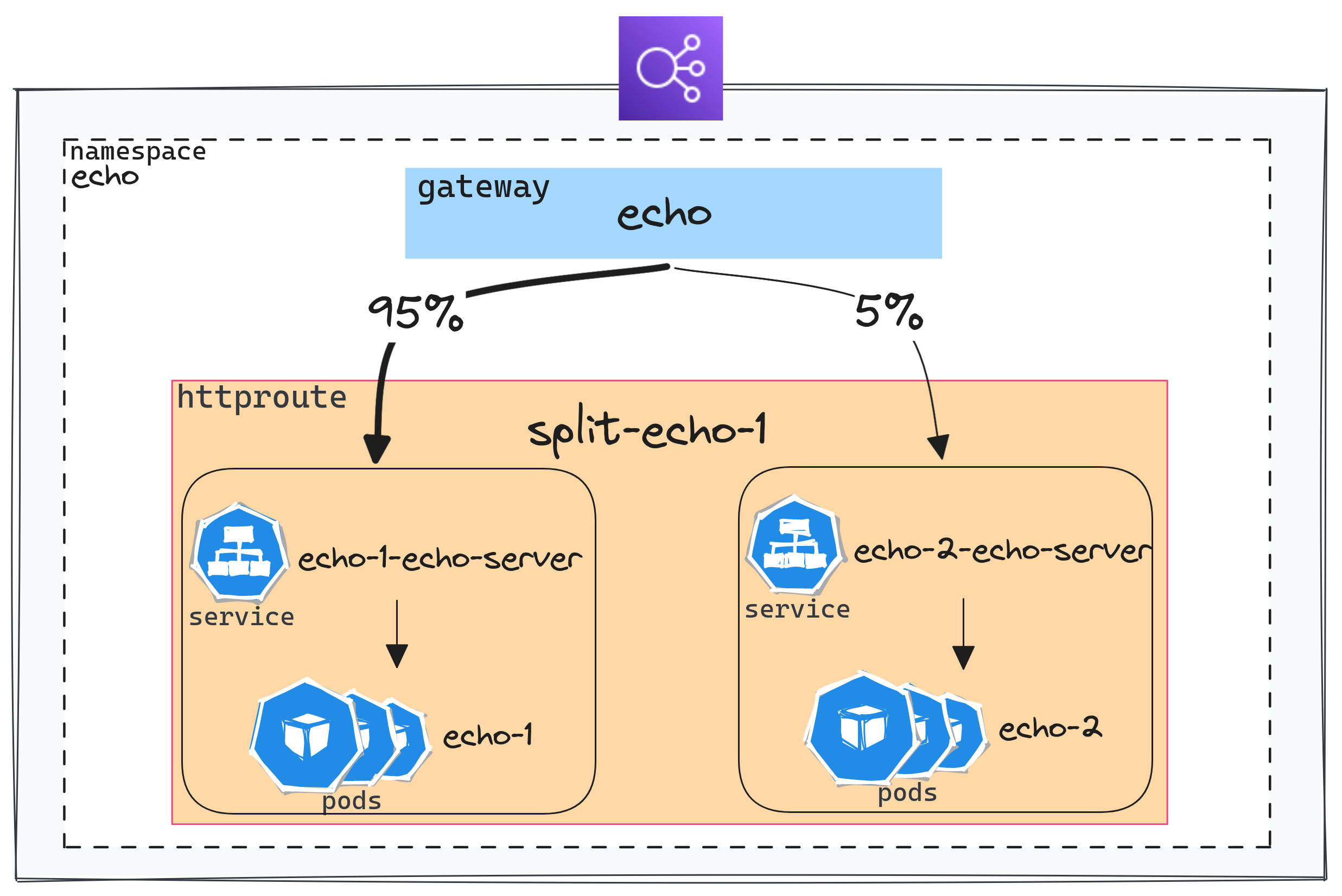

Traffic splitting

One feature commonly provided by Service Meshes is the ability to test an application on a portion of the traffic when a new version is available (A/B testing or Canary deployment).

GAPI makes this quite simple by using weights.

Here's an example that forwards 5% of the traffic to the service echo-2-echo-server:

apps/base/echo/httproute-split.yaml

```yaml
...
  hostnames:
    - "split-echo.${domain_name}"
  rules:
    - matches:
        - path:
            type: PathPrefix
            value: /
      backendRefs:
        - name: echo-1-echo-server
          port: 80
          weight: 95
        - name: echo-2-echo-server
          port: 80
          weight: 5
```

Let's check that the distribution happens as expected:

```shell
./scripts/check-split.sh https://split-echo.cloud.ogenki.io
Number of requests for echo-1: 95
Number of requests for echo-2: 5
```
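Weights are relative rather than percentages: 95/5 behaves like 19/1. A common refinement, sketched below under the assumption that testers can send a hypothetical `x-canary` header, is to pin those requests to the new version while everyone else keeps the weighted split. Per the Gateway API precedence rules, a match with more header conditions wins over an otherwise equivalent one, so the header rule takes priority; it's worth verifying this behaviour on your Cilium version.

```yaml
...
  hostnames:
    - "split-echo.${domain_name}"
  rules:
    # Requests carrying x-canary: true always reach the new version
    - matches:
        - headers:
            - name: x-canary  # hypothetical header
              value: "true"
      backendRefs:
        - name: echo-2-echo-server
          port: 80
    # All other requests: 95/5 weighted split
    - matches:
        - path:
            type: PathPrefix
            value: /
      backendRefs:
        - name: echo-1-echo-server
          port: 80
          weight: 95
        - name: echo-2-echo-server
          port: 80
          weight: 5
```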

Headers modifications

It is also possible to change HTTP Headers: to add, modify, or delete them. These modifications can be applied to either request or response headers through the use of filters in the HTTPRoute manifest.

For instance, we will add a Header to the request.

```yaml
apiVersion: gateway.networking.k8s.io/v1beta1
kind: HTTPRoute
metadata:
  name: echo-1
  namespace: echo
spec:
...
  rules:
    - matches:
        - path:
            type: PathPrefix
            value: /req-header-add
      filters:
        - type: RequestHeaderModifier
          requestHeaderModifier:
            add:
              - name: foo
                value: bar
      backendRefs:
        - name: echo-1-echo-server
          port: 80
...
```

This command checks that the header is indeed added:

```shell
curl -s https://echo.cloud.ogenki.io/req-header-add | jq '.request.headers'
{
  "host": "echo.cloud.ogenki.io",
  "user-agent": "curl/8.2.1",
  "accept": "*/*",
  "x-forwarded-for": "81.220.234.254",
  "x-forwarded-proto": "https",
  "x-envoy-external-address": "81.220.234.254",
  "x-request-id": "320ba4d2-3bd6-4c2f-8a97-74296a9f3f26",
  "foo": "bar"
}
```
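Response headers follow the same pattern with the `ResponseHeaderModifier` filter, and both filters accept `add`, `set` (overwrite an existing value) and `remove`. A sketch on a hypothetical `/resp-header` path; ResponseHeaderModifier is an extended feature of the Gateway API, so it's worth checking that your Cilium release supports it.

```yaml
...
  rules:
    - matches:
        - path:
            type: PathPrefix
            value: /resp-header  # hypothetical path
      filters:
        - type: ResponseHeaderModifier
          responseHeaderModifier:
            # Overwrite (or create) the header in the response
            set:
              - name: cache-control
                value: no-store
            # Strip the header from the response
            remove:
              - x-powered-by
      backendRefs:
        - name: echo-1-echo-server
          port: 80
...
```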

🔒 Assign the proper permissions

GAPI offers a clear permission-sharing model between the traffic routing infrastructure (managed by cluster administrators) and the applications (managed by developers).

The availability of multiple custom resources allows using Kubernetes RBAC to assign permissions declaratively. I've added a few examples which have no effect in my demo cluster but might give you an idea.

The configuration below grants members of the developers group the ability to manage HTTPRoutes within the echo namespace, while only providing them read access to the Gateways.

```yaml
---
apiVersion: rbac.authorization.k8s.io/v1
kind: Role
metadata:
  namespace: echo
  name: gapi-developer
rules:
  - apiGroups: ["gateway.networking.k8s.io"]
    resources: ["httproutes"]
    verbs: ["*"]
  - apiGroups: ["gateway.networking.k8s.io"]
    resources: ["gateways"]
    verbs: ["get", "list"]
---
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  name: gapi-developer
  namespace: echo
subjects:
  - kind: Group
    name: "developers"
    apiGroup: rbac.authorization.k8s.io
roleRef:
  kind: Role
  name: gapi-developer
  apiGroup: rbac.authorization.k8s.io
```
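The other side of this model is the platform team, which owns the Gateways. A sketch of a matching ClusterRole, assuming a hypothetical `platform` group: read-only access to the cluster-scoped GatewayClasses, and full control over Gateways and ReferenceGrants in every namespace. As with the previous example, it's an illustration rather than a drop-in policy.

```yaml
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  name: gapi-platform-operator  # hypothetical
rules:
  # GatewayClasses are cluster-scoped and usually installed with the implementation
  - apiGroups: ["gateway.networking.k8s.io"]
    resources: ["gatewayclasses"]
    verbs: ["get", "list", "watch"]
  # Infrastructure objects managed by the platform team
  - apiGroups: ["gateway.networking.k8s.io"]
    resources: ["gateways", "referencegrants"]
    verbs: ["*"]
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: gapi-platform-operator
subjects:
  - kind: Group
    name: "platform"  # hypothetical group
    apiGroup: rbac.authorization.k8s.io
roleRef:
  kind: ClusterRole
  name: gapi-platform-operator
  apiGroup: rbac.authorization.k8s.io
```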

🤔 A somewhat unclear scope at first glance

One could confuse it with what's commonly referred to as an API Gateway. A section of the FAQ has been created to clarify the difference with the Gateway API. Although GAPI offers features typically found in an API Gateway, it primarily serves as a specific implementation for Kubernetes. However, the choice of this name can indeed cause confusion.

Moreover, please note that this article focuses solely on inbound traffic, termed north-south, traditionally managed by Ingress Controllers. This traffic is actually GAPI's initial scope. A recent initiative named GAMMA aims to also handle east-west routing, which will standardize certain features commonly provided by Service Mesh solutions in the future. (See this article for more details.)

💭 Final thoughts

To be honest, I've known about the Gateway API for some time. Although I've read a few articles, I hadn't truly dived deep. I'd think, "Why bother? My Ingress Controller works, and there's a learning curve with this."

GAPI is on the rise and nearing its GA release. Several projects have embraced it, and this API for managing traffic within Kubernetes will quickly become the standard.

I must say, configuring GAPI felt intuitive and explicit ❤️. Its security model strikes a balance, empowering developers without compromising security. And the seamless infrastructure management? You can switch between implementations without touching the *Routes.

Would I swap my Ingress Controller for Cilium today? Not yet, but it's on the horizon.

It's worth highlighting Cilium's broad range of capabilities: With Kubernetes surrounded by a plethora of tools, Cilium stands out, promising features like metrics, tracing, service-mesh, security, and, yes, Ingress Controller with GAPI.

However, there are a few challenges to note:

- Lack of TCP and UDP support.

- Lack of gRPC support.

- The need to use a mutation rule to configure cloud components (Github Issue).

- Many of the features discussed in this blog are still in the experimental stage. For instance, the extended functions have only been supported since the most recent release at the time of writing (v1.14.2). I attempted to set up a straightforward HTTP>HTTPS redirect but ran into this issue. Consequently, I expect some modifications to the API in the near future.
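For reference, this is roughly what an HTTP>HTTPS redirect looks like with the standard `RequestRedirect` filter. It's a sketch rather than a working configuration on v1.14.2 given the issue mentioned above, and it assumes a hypothetical plain HTTP listener named `plain-http` added to the echo Gateway.

```yaml
# Hypothetical extra listener, appended to spec.listeners of the echo Gateway
- name: plain-http
  hostname: "echo.${domain_name}"
  port: 80
  protocol: HTTP
  allowedRoutes:
    namespaces:
      from: Same
---
# Route bound to that listener only; every request gets a redirect
apiVersion: gateway.networking.k8s.io/v1beta1
kind: HTTPRoute
metadata:
  name: echo-https-redirect  # hypothetical
  namespace: echo
spec:
  parentRefs:
    - name: echo
      sectionName: plain-http
  hostnames:
    - "echo.${domain_name}"
  rules:
    - filters:
        - type: RequestRedirect
          requestRedirect:
            scheme: https
            statusCode: 301
```

Other routes serving the same hostname would then need a `sectionName` pointing to the HTTPS listener; otherwise they also attach to the plain HTTP listener and compete with the redirect.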

While I've only scratched the surface of what Cilium's GAPI can offer (honestly, this post is already quite long 😜), I am hopeful that we can consider its use in production soon. But considering the points mentioned earlier, I would advise waiting a bit longer. That said, if you want to prepare for the future, now's the time 😉!

🔖 References

- https://gateway-api.sigs.k8s.io/

- https://docs.cilium.io/en/latest/network/servicemesh/gateway-api/gateway-api/#gs-gateway-api

- https://isovalent.com/blog/post/cilium-gateway-api/

- https://isovalent.com/blog/post/tutorial-getting-started-with-the-cilium-gateway-api/

- Isovalent's labs are great to start playing with Gateway API and you'll get new badges to add to your collection 😄