TLS with Gateway API: Efficient and Secure Management of Public and Private Certificates

Overview

TLS encryption is an essential standard for securing services and applications, whether on the internet or within an enterprise. On the internet, using a TLS certificate issued by a recognized certification authority is crucial to ensure the confidentiality of data exchanges.

For internal communications, a private PKI (Private Public Key Infrastructure) plays a critical role in distributing and validating the certificates necessary for encrypting communications within the enterprise, thus ensuring enhanced security.

In this article, we will walk through setting up effective and robust TLS certificate management within an enterprise, exploring best practices, tools, and strategies for a reliable certificate infrastructure.

🎯 Our target

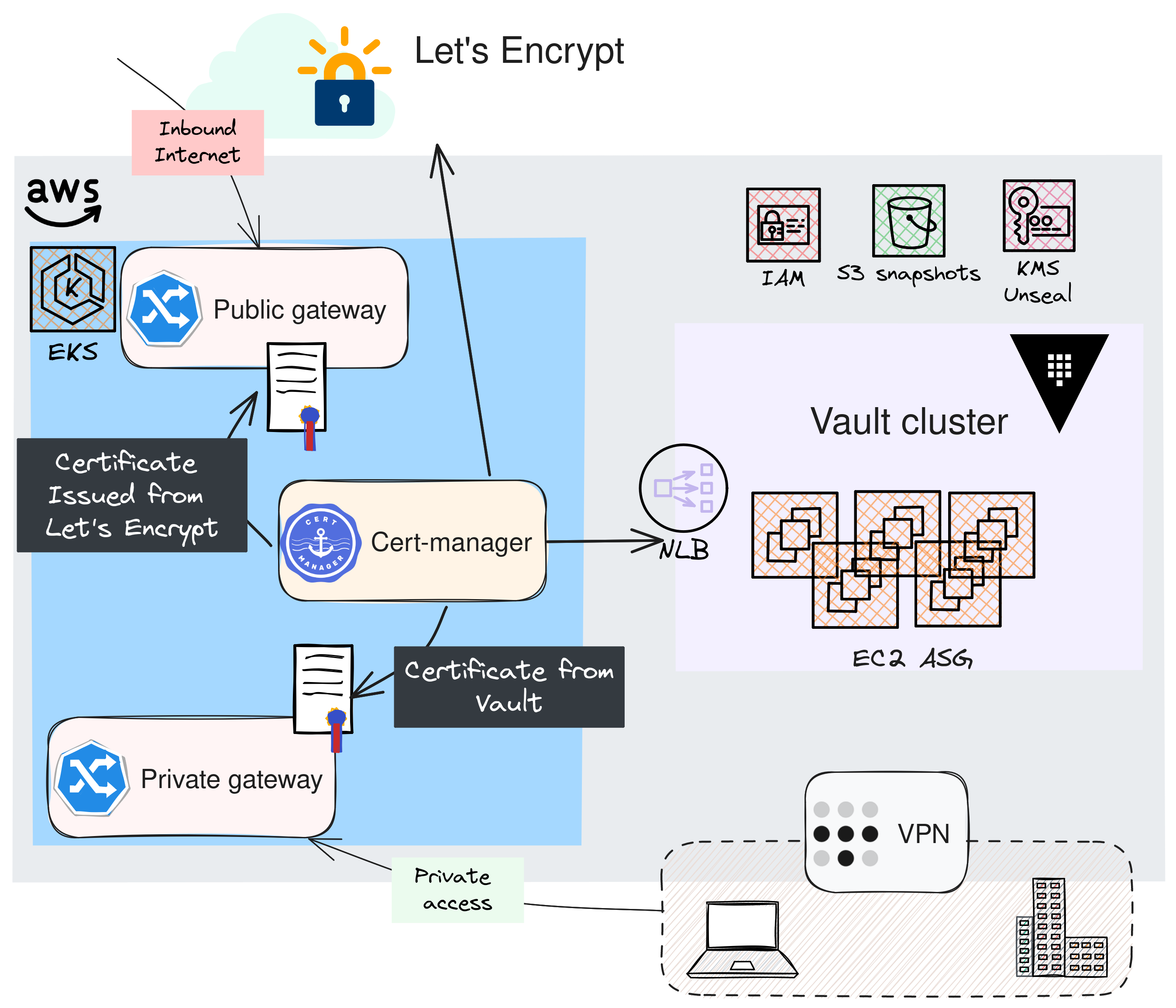

- To allow users to access our applications, we will use the Gateway API standard. (You may want to have a look at my previous article on the topic.)

- In the architecture presented above, one component plays a major role: Cert-manager. Indeed, it is the central engine that will handle the generation and renewal of certificates.

- For applications intended to remain internal and not exposed on the internet, we will opt for generating certificates via a private PKI with HashiCorp's Vault.

- On the other hand, for public applications, we will use certificates issued by Let's Encrypt.

🛂 About Let's Encrypt

Based on the ACME protocol (Automatic Certificate Management Environment), Let's Encrypt enables the automatic issuance and renewal of certificates. It is simple to implement, free, and enhances security. However, its certificates are short-lived (90 days), which requires frequent renewals. For more information on how it works, you can refer to this documentation.

🔐 A private PKI with Vault

A private PKI, or Private Public Key Infrastructure, is a cryptographic system used within an organization to secure data and communications. It relies on an internal Certification Authority (CA) that issues organization-specific TLS certificates.

This system enables an organization to:

- Fully control the identity verification and authentication procedures, and to issue certificates for internal domains, which is not feasible with Let's Encrypt.

- Secure internal communications and data with strong authentication and encryption within the organization.

However, implementing such an infrastructure requires careful attention and the management of multiple components. Here, we'll explore one of Vault's main features: although it is primarily a secrets management tool, it can also serve as a private PKI.

All the actions performed in this post come from this git repository.

It contains the OpenTofu code for deploying and configuring Vault, as well as numerous resources that help me write my blog posts. Feel free to provide feedback or open issues... 🙏

✅ Requirements

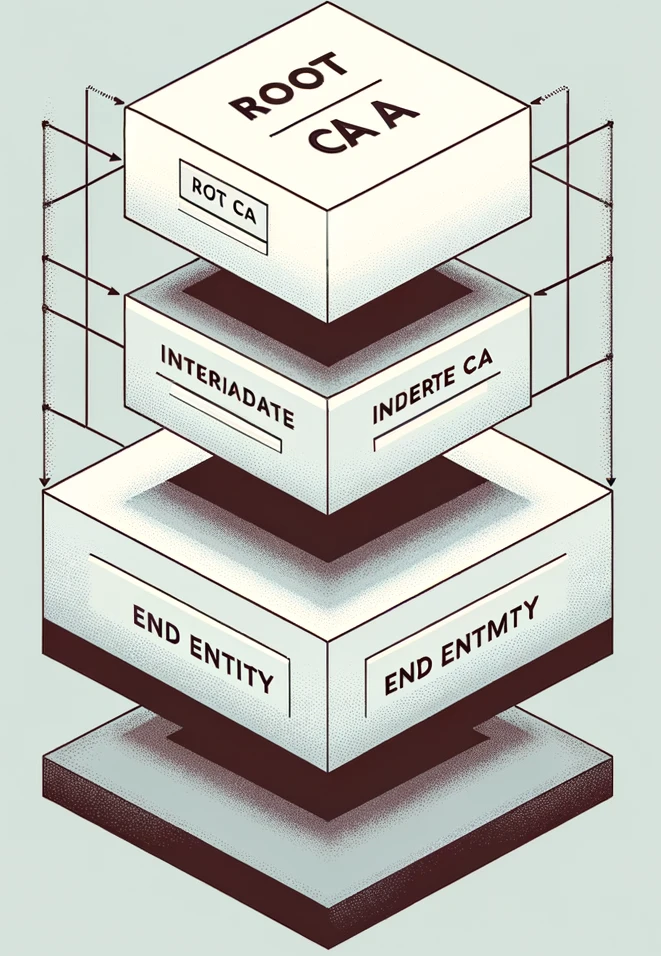

A three-tier PKI consists of a Root Certificate Authority (CA) at the top, Intermediate CAs in the middle, and Issuing CAs at the bottom. The Root CA signs the Intermediate CAs' certificates, the Intermediate CAs sign the Issuing CAs' certificates, and the Issuing CAs issue certificates to end users, services, or devices. This structure enhances security by minimizing the Root CA's exposure and simplifies the management and revocation of certificates, offering a scalable and flexible solution for digital security.

To strengthen the security of the certificate management system, it is recommended to create an offline Root Certification Authority (Root CA). The following steps must therefore be completed beforehand:

- Generate the offline Root Certification Authority: isolating the Root CA from the network minimizes security risks.

- Create an Intermediate Certification Authority: it operates under the Root CA's authority and is used to issue certificates, allowing for more flexible and secure management.

- Generate the certificate for the Vault server from the Intermediate CA: this establishes a chain of trust from the Root CA, through the Intermediate CA, to the end-user certificates.

By following the procedure described here, you should obtain the following files, which are used throughout the rest of this article. This is a suggestion based on openssl; you may use whichever method suits you best to achieve the same outcome.

```shell
cd opentofu/openbao/cluster

ls .tls/*.pem
.tls/bundle.pem .tls/ca-chain.pem .tls/intermediate-ca-key.pem .tls/intermediate-ca.pem .tls/root-ca-key.pem .tls/root-ca.pem .tls/vault-key.pem .tls/vault.pem
```
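For readers who want a starting point, here is a minimal sketch of how these files could be produced with openssl. It is not the procedure linked above. It assumes OpenSSL 1.1.1 or 3.x, and the subject names, key sizes and lifetimes are illustrative choices. It also assumes that bundle.pem is the Vault server certificate followed by the CA chain. In a real setup, the root CA would be generated on an offline machine and root-ca-key.pem kept on disconnected media.

```shell
#!/usr/bin/env bash
# Minimal sketch: offline root CA -> intermediate CA -> Vault server certificate.
# Assumes OpenSSL 1.1.1 or 3.x. Names, key sizes and lifetimes are illustrative.
set -euo pipefail

TLS_DIR=".tls"
VAULT_HOSTNAME="vault.priv.cloud.ogenki.io"
mkdir -p "${TLS_DIR}"

# X.509 v3 extensions for each tier, kept in a temporary file.
EXT_FILE="$(mktemp)"
cat > "${EXT_FILE}" <<EOF
[root_ca]
basicConstraints = critical, CA:TRUE
keyUsage = critical, keyCertSign, cRLSign

[intermediate_ca]
basicConstraints = critical, CA:TRUE
keyUsage = critical, keyCertSign, cRLSign

[vault_server]
basicConstraints = critical, CA:FALSE
keyUsage = critical, digitalSignature, keyEncipherment
extendedKeyUsage = serverAuth, clientAuth
subjectAltName = DNS:${VAULT_HOSTNAME}
EOF

# 1. Root CA: self-signed, 10 years. In practice, do this on an offline machine.
openssl genrsa -out "${TLS_DIR}/root-ca-key.pem" 4096
openssl req -new -key "${TLS_DIR}/root-ca-key.pem" \
  -subj "/O=Ogenki/CN=Ogenki Root CA" -out "${TLS_DIR}/root-ca.csr"
openssl x509 -req -in "${TLS_DIR}/root-ca.csr" -signkey "${TLS_DIR}/root-ca-key.pem" \
  -sha256 -days 3650 -extfile "${EXT_FILE}" -extensions root_ca \
  -out "${TLS_DIR}/root-ca.pem"

# 2. Intermediate CA: signed by the root, 5 years.
openssl genrsa -out "${TLS_DIR}/intermediate-ca-key.pem" 4096
openssl req -new -key "${TLS_DIR}/intermediate-ca-key.pem" \
  -subj "/O=Ogenki/CN=Ogenki Intermediate CA" -out "${TLS_DIR}/intermediate-ca.csr"
openssl x509 -req -in "${TLS_DIR}/intermediate-ca.csr" \
  -CA "${TLS_DIR}/root-ca.pem" -CAkey "${TLS_DIR}/root-ca-key.pem" -CAcreateserial \
  -sha256 -days 1825 -extfile "${EXT_FILE}" -extensions intermediate_ca \
  -out "${TLS_DIR}/intermediate-ca.pem"

# 3. Vault server certificate: signed by the intermediate, 1 year.
openssl genrsa -out "${TLS_DIR}/vault-key.pem" 2048
openssl req -new -key "${TLS_DIR}/vault-key.pem" \
  -subj "/O=Ogenki/CN=${VAULT_HOSTNAME}" -out "${TLS_DIR}/vault.csr"
openssl x509 -req -in "${TLS_DIR}/vault.csr" \
  -CA "${TLS_DIR}/intermediate-ca.pem" -CAkey "${TLS_DIR}/intermediate-ca-key.pem" \
  -CAcreateserial -sha256 -days 365 -extfile "${EXT_FILE}" -extensions vault_server \
  -out "${TLS_DIR}/vault.pem"

# CA chain (intermediate, then root). Assumption: bundle.pem = server cert + CA chain.
cat "${TLS_DIR}/intermediate-ca.pem" "${TLS_DIR}/root-ca.pem" > "${TLS_DIR}/ca-chain.pem"
cat "${TLS_DIR}/vault.pem" "${TLS_DIR}/ca-chain.pem" > "${TLS_DIR}/bundle.pem"
rm -f "${TLS_DIR}"/*.csr "${TLS_DIR}"/*.srl "${EXT_FILE}"

# Check the chain of trust: root -> intermediate -> Vault server.
openssl verify -CAfile "${TLS_DIR}/root-ca.pem" \
  -untrusted "${TLS_DIR}/intermediate-ca.pem" "${TLS_DIR}/vault.pem"
```

If the chain is consistent, the final openssl verify prints .tls/vault.pem: OK.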

🏗️ Building the cluster

There are several methods to deploy a Vault cluster, but I couldn't find one that suited me, so I built my own based on the following decisions:

Integrated storage based on the Raft protocol, which is particularly well suited to distributed systems and ensures high resilience. The table below shows the failure tolerance for each cluster size:

| Cluster size | Failure tolerance |
| --- | --- |
| 1 | 0 |
| 3 | 1 |
| 5 | 2 |
| 7 | 3 |

I chose to run a Vault cluster of 5 members, which can tolerate the failure of 2 nodes.
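The failure tolerance values follow from Raft's majority quorum. With $n$ voting nodes, a leader can only be elected, and writes committed, while a quorum $q(n)$ of nodes is available. The cluster therefore survives the loss of the remaining $f(n)$ nodes:

$$
q(n) = \left\lfloor \frac{n}{2} \right\rfloor + 1, \qquad f(n) = n - q(n) = \left\lfloor \frac{n-1}{2} \right\rfloor
$$

For $n = 5$, this gives $q = 3$ and $f = 2$. It also shows why even cluster sizes bring no benefit: $f(4) = f(3) = 1$, while a 4-node cluster needs one more node in its quorum.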

Ephemeral nodes on Spot instances: the cluster runs exclusively on Spot instances for cost efficiency. The Auto Scaling Group uses three distinct Spot instance pools, each with a different instance type. This diversification mitigates the risk of a shortage of any single Spot instance type, preserving high availability and service continuity while keeping costs low.

Vault Auto-Unseal: this feature is crucial given the ephemeral nature of the nodes. Each new node unseals itself automatically using a KMS key, which minimizes downtime and removes the need for manual unsealing.

This article does not describe every step; they are all available in the GitHub repository's documentation. Here is an example of OpenTofu variables:

```hcl
name                  = "ogenki-vault"
leader_tls_servername = "vault.priv.cloud.ogenki.io"
domain_name           = "priv.cloud.ogenki.io"
env                   = "dev"
mode                  = "ha"
region                = "eu-west-3"
enable_ssm            = true

# Use hardened AMI
ami_owner = "xxx" # AWS account that owns the AMI
ami_filter = {
  "name" = ["*hardened-ubuntu-*"]
}
```

Once all the steps are completed, Vault is reachable and the cluster consists of 5 nodes.

🛠️ Configuration

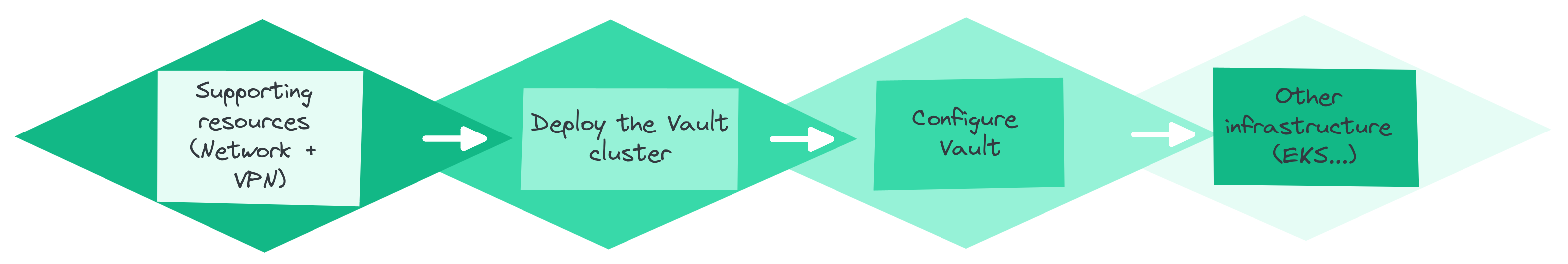

Deploying a complete platform is done sequentially, in distinct steps, because some operations must be performed manually to ensure optimal security: the generation of the root certificate, which must be kept offline, and the initialization of Vault with the initial root token.

Supporting resources such as network components must exist before any machine can be deployed. The Vault cluster can then be installed and configured, before adding other infrastructure elements that will likely depend on the sensitive information stored in Vault.

The Vault configuration is applied with the Terraform provider, which authenticates using a token generated from the Vault instance. The configuration proposed here sets up the PKI and allows internal applications to access Vault's API, with a focus on configuring Cert-Manager.

Here are the organization-specific variables:

```hcl
domain_name      = "priv.cloud.ogenki.io"
pki_common_name  = "Ogenki Vault Issuer"
pki_country      = "France"
pki_organization = "Ogenki"
pki_domains = [
  "cluster.local",
  "priv.cloud.ogenki.io"
]
```

After following the procedure, the PKI is configured, and we can generate certificates.

Unlike public PKIs, whose certificates are automatically trusted by client software, a private PKI requires its root CA certificate to be explicitly distributed and installed in the trust stores of users' devices.

💾 Scheduled Backups

Like any solution that holds data, Vault must be backed up, all the more so because it contains sensitive data: regular backups to a secure location are essential. The solution proposed here is simply a CronJob. It uses Crossplane to provision the AWS resources and breaks down as follows:

- An S3 bucket for storing the snapshots.

- A lifecycle policy to keep only the last 30 backups.

- The bucket is encrypted with a dedicated KMS key.

- An external-secret to retrieve the authentication parameters of the AppRole specific to the CronJob.

- A CronJob that runs the script available in the repo and takes a snapshot as described in HashiCorp's documentation.

- An IRSA role that grants the pod permissions to write the snapshots to S3.
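The repository's script is not reproduced here. The following is a rough, hypothetical sketch of what such a job does. It makes several assumptions: the vault and aws CLIs are available in the image, VAULT_ADDR points to the cluster, and the AppRole credentials are exposed as the environment variables VAULT_ROLE_ID and VAULT_SECRET_ID (names chosen for illustration).

```shell
#!/usr/bin/env bash
# Hypothetical snapshot job (not the repository's script).
# Assumes: vault and aws CLIs installed, VAULT_ADDR set, and the AppRole
# credentials exposed as VAULT_ROLE_ID / VAULT_SECRET_ID (illustrative names).
set -euo pipefail

vault_snapshot_to_s3() {
  local bucket="$1"   # e.g. s3://<snapshots-bucket>
  local snapshot
  snapshot="${TMPDIR:-/tmp}/vault-$(date -u +%Y%m%dT%H%M%SZ).snap"

  # Log in with the CronJob's AppRole and keep the resulting token.
  VAULT_TOKEN="$(vault write -field=token auth/approle/login \
    role_id="${VAULT_ROLE_ID}" secret_id="${VAULT_SECRET_ID}")"
  export VAULT_TOKEN

  # Save a snapshot of the integrated (Raft) storage.
  vault operator raft snapshot save "${snapshot}"

  # Upload it using the IRSA role's permissions; retention is left to the
  # bucket's lifecycle policy.
  aws s3 cp "${snapshot}" "${bucket%/}/"
  rm -f "${snapshot}"
}

# Usage, e.g. as the CronJob's command:
#   vault_snapshot_to_s3 "s3://<snapshots-bucket>"
```

The AppRole's policy must allow read on sys/storage/raft/snapshot for the snapshot command to succeed.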

🚀 TLS with Gateway API

The aim of this post is to demonstrate practical usage with Gateway API. Depending on the protocol used, several options are available for securing connections with TLS. For instance, Passthrough mode leaves TLS termination to the upstream (the pod itself). For our use case, however, we will use the most common scenario: HTTPS terminated at the Gateway.

In practice, the listener only needs to reference a Kubernetes secret that stores the certificate:

```yaml
listeners:
  - protocol: HTTPS
    port: 443
    tls:
      mode: Terminate
      certificateRefs:
        - name: foobar-tls
```

Let's look into this in detail, as there are a few preliminary steps in order to obtain these secrets 🔍.

☁️ A Public Certificate

Cert-Manager is an open-source tool for managing TLS certificates in Kubernetes. It is basically a Kubernetes operator driven by CRDs (Custom Resource Definitions): certificates are requested by creating resources of type Certificate. Cert-manager then makes sure they remain valid and triggers a renewal when necessary. It integrates with a growing number of issuers such as Let's Encrypt, Venafi, Google, Vault, etc.

To configure Cert-manager with Let's Encrypt, we'll create a ClusterIssuer.

ℹ️ In the context of cert-manager, an Issuer is a namespaced resource that can only issue certificates within its own namespace, while a ClusterIssuer is a cluster-scoped resource that can issue certificates in all namespaces.

security/base/cert-manager/le-clusterissuer-prod.yaml

```yaml
apiVersion: cert-manager.io/v1
kind: ClusterIssuer
metadata:
  name: letsencrypt-prod
spec:
  acme:
    email: mymail@domain.tld
    server: https://acme-v02.api.letsencrypt.org/directory
    privateKeySecretRef:
      name: ogenki-issuer-account-key
    solvers:
      - selector:
          dnsZones:
            - "cloud.ogenki.io"
        dns01:
          route53:
            region: eu-west-3
```

- We are using the production instance of Let's Encrypt here, which is subject to rate limits; it is recommended to start your tests on the staging instance.

- The email address is used to receive notifications, such as certificate expiry warnings.

- An ogenki-issuer-account-key secret is generated to hold the private key used to authenticate with the ACME server.

- Domain ownership is proven with a DNS-01 challenge: cert-manager creates a TXT record in the Route53 zone, which Let's Encrypt then checks.
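Following the advice to start on the staging instance, a staging ClusterIssuer can mirror the production one. This is a sketch. The name letsencrypt-staging and the account key secret ogenki-issuer-staging-account-key are hypothetical, and only the ACME server URL really has to change.

```shell
# Hypothetical staging issuer mirroring letsencrypt-prod: same DNS-01 solver,
# staging ACME endpoint, separate account key secret.
kubectl apply -f - <<'EOF'
apiVersion: cert-manager.io/v1
kind: ClusterIssuer
metadata:
  name: letsencrypt-staging
spec:
  acme:
    email: mymail@domain.tld
    server: https://acme-staging-v02.api.letsencrypt.org/directory
    privateKeySecretRef:
      name: ogenki-issuer-staging-account-key
    solvers:
      - selector:
          dnsZones:
            - "cloud.ogenki.io"
        dns01:
          route53:
            region: eu-west-3
EOF
```

While testing, the Gateway annotation can point to letsencrypt-staging. Staging certificates are signed by an untrusted test CA, so browser warnings are expected.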

Now, how can we call this ClusterIssuer from a Gateway-API resource?

It turns out there is a very simple integration through an annotation at the Gateway level. This solution is experimental and requires enabling a feature gate when deploying cert-manager.

security/base/cert-manager/helmrelease.yaml

```yaml
apiVersion: helm.toolkit.fluxcd.io/v2beta2
kind: HelmRelease
metadata:
  name: cert-manager
  namespace: security
spec:
  values:
    ...
    featureGates: ExperimentalGatewayAPISupport=true
```

It's also necessary to grant permissions to the Cert-manager controller to interact with Route53 to complete the DNS challenge. Here, I'm using a Crossplane Composition. (ℹ️ If you want to delve into Crossplane, it's over here.)
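The Crossplane Composition itself is not shown here. For reference, the cert-manager documentation describes the permissions its Route53 DNS-01 solver needs, and they can be written as an IAM policy document. The output file name below is arbitrary.

```shell
#!/usr/bin/env bash
# IAM policy for cert-manager's Route53 DNS-01 solver, following the
# cert-manager documentation. The file name is an arbitrary choice.
set -euo pipefail

cat > cert-manager-route53-policy.json <<'EOF'
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Effect": "Allow",
      "Action": "route53:GetChange",
      "Resource": "arn:aws:route53:::change/*"
    },
    {
      "Effect": "Allow",
      "Action": [
        "route53:ChangeResourceRecordSets",
        "route53:ListResourceRecordSets"
      ],
      "Resource": "arn:aws:route53:::hostedzone/*"
    },
    {
      "Effect": "Allow",
      "Action": "route53:ListHostedZonesByName",
      "Resource": "*"
    }
  ]
}
EOF
```

You can narrow the hostedzone/* resource down to the ID of the cloud.ogenki.io hosted zone, which further limits what cert-manager is allowed to modify.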

Then, the annotation and the target secret need to be specified in the Gateway manifest.

infrastructure/base/gapi/platform-public-gateway.yaml

```yaml
apiVersion: gateway.networking.k8s.io/v1beta1
kind: Gateway
metadata:
  name: platform-public
  annotations:
    cert-manager.io/cluster-issuer: letsencrypt-prod
spec:
  gatewayClassName: cilium
  listeners:
    - name: http
      hostname: "*.${domain_name}"
      ...
      tls:
        mode: Terminate
        certificateRefs:
          - name: platform-public-tls
```

When the Gateway is created, cert-manager generates a Certificate resource, which uses the letsencrypt-prod ClusterIssuer defined above.

```shell
kubectl describe certificate -n infrastructure platform-public-tls
Name:         platform-public-tls
Namespace:    infrastructure
API Version:  cert-manager.io/v1
Kind:         Certificate
...
Spec:
  Dns Names:
    *.cloud.ogenki.io
  Issuer Ref:
    Group:      cert-manager.io
    Kind:       ClusterIssuer
    Name:       letsencrypt-prod
  Secret Name:  platform-public-tls
  Usages:
    digital signature
    key encipherment
Status:
  Conditions:
    Last Transition Time:  2024-01-24T20:43:26Z
    Message:               Certificate is up to date and has not expired
    Observed Generation:   1
    Reason:                Ready
    Status:                True
    Type:                  Ready
  Not After:               2024-04-23T19:43:24Z
  Not Before:              2024-01-24T19:43:25Z
  Renewal Time:            2024-03-24T19:43:24Z
  Revision:                1
```

Finally, after a few seconds, a Kubernetes secret containing the certificate is created. It is a secret of type kubernetes.io/tls, which contains three entries: tls.crt, tls.key, and ca.crt.

The certificates generated by cert-manager are stored in Kubernetes secrets. Although it is possible to extract them using base64 and openssl commands, why not make life easier?

I am a command line guy and I regularly use the view-cert plugin, which displays a summary of tls type secrets.

```shell
kubectl view-cert -n infrastructure platform-public-tls
[
  {
    "SecretName": "platform-public-tls",
    "Namespace": "infrastructure",
    "Version": 3,
    "SerialNumber": "35f659ad03e437805fbf48111b74738efe3",
    "Issuer": "CN=R3,O=Let's Encrypt,C=US",
    "Validity": {
      "NotBefore": "2024-01-28T09:41:35Z",
      "NotAfter": "2024-04-27T09:41:34Z"
    },
    "Subject": "CN=*.cloud.ogenki.io",
    "IsCA": false
  }
]
```

It can be installed using krew:

```shell
kubectl krew install view-cert
```
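For comparison, the manual route with base64 and openssl mentioned earlier fits in two small shell functions. This is a minimal sketch that assumes kubectl access to the cluster and openssl on the PATH. The function names are made up for illustration.

```shell
#!/usr/bin/env bash
# Plugin-free alternative to view-cert, using base64 and openssl.
# Assumes kubectl access to the cluster and openssl on the PATH.
set -euo pipefail

# Read a base64-encoded PEM certificate (a secret's tls.crt field) on stdin and
# print the subject, issuer and validity of the first certificate (the leaf).
summarize_tls_crt() {
  base64 -d | openssl x509 -noout -subject -issuer -startdate -enddate
}

# view_secret_cert NAMESPACE SECRET_NAME (hypothetical helper name)
view_secret_cert() {
  kubectl get secret -n "$1" "$2" -o jsonpath='{.data.tls\.crt}' | summarize_tls_crt
}

# Usage:
#   view_secret_cert infrastructure platform-public-tls
```

When tls.crt holds a full chain, openssl x509 only reads the first certificate, which is the leaf.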

🏠 A Private Certificate

Creating private certificates with Vault is quite similar to the above method, with slight differences. We also need to define a ClusterIssuer:

security/base/cert-manager/vault-clusterissuer.yaml

```yaml
apiVersion: cert-manager.io/v1
kind: ClusterIssuer
metadata:
  name: vault
  namespace: security
spec:
  vault:
    server: https://vault.priv.cloud.ogenki.io:8200
    path: pki_private_issuer/sign/ogenki
    caBundle: LS0tLS1CRUdJTiBDRVJUSUZJQ0...
    auth:
      appRole:
        path: approle
        roleId: f8363d0f-b7db-9b08-67ab-8425ab527587
        secretRef:
          name: cert-manager-vault-approle
          key: secretId
```

- The URL is that of the Vault server. It must be reachable from the pods running in Kubernetes.

- The path is the Vault PKI signing endpoint created during the Vault configuration phase: pki_private_issuer is the PKI secrets engine mount, and ogenki is the role authorized to sign certificates.

- Authentication is done with an AppRole.

For more details on all the actions necessary for configuring Cert-Manager with Vault, refer to this procedure.
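The linked procedure covers the full setup. As a hypothetical manual alternative, the AppRole credentials could be obtained and stored as follows. The sketch assumes that the vault CLI is already logged in and that an AppRole named cert-manager exists (an illustrative name).

```shell
#!/usr/bin/env bash
# Hypothetical manual bootstrap of the secret referenced by the "vault" ClusterIssuer.
# Assumes the vault CLI is logged in and an AppRole named "cert-manager" exists
# (illustrative name: reuse the role created during the Vault configuration phase).
set -euo pipefail

# The role_id is less sensitive: it goes into spec.vault.auth.appRole.roleId.
vault read -field=role_id auth/approle/role/cert-manager/role-id

# The secret_id is sensitive: generate one and store it only in Kubernetes.
SECRET_ID="$(vault write -f -field=secret_id auth/approle/role/cert-manager/secret-id)"

# Secrets referenced by a ClusterIssuer are read from cert-manager's "cluster
# resource namespace", by default the namespace cert-manager is deployed in
# ("security" in this setup).
kubectl apply -f - <<EOF
apiVersion: v1
kind: Secret
metadata:
  name: cert-manager-vault-approle
  namespace: security
type: Opaque
stringData:
  secretId: "${SECRET_ID}"
EOF

# caBundle is the base64-encoded PEM of the CA chain that signed Vault's TLS
# certificate, e.g. with GNU coreutils: base64 -w0 .tls/ca-chain.pem
```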

The main difference from the Let's Encrypt method is that the certificate must be created explicitly, whereas the previous method created it automatically from an annotation.

infrastructure/base/gapi/platform-private-gateway-certificate.yaml

```yaml
apiVersion: cert-manager.io/v1
kind: Certificate
metadata:
  name: private-gateway-certificate
spec:
  secretName: private-gateway-tls
  duration: 2160h # 90d
  renewBefore: 360h # 15d
  commonName: private-gateway.priv.cloud.ogenki.io
  dnsNames:
    - gitops-${cluster_name}.priv.${domain_name}
    - grafana-${cluster_name}.priv.${domain_name}
    - harbor.priv.${domain_name}
  issuerRef:
    name: vault
    kind: ClusterIssuer
    group: cert-manager.io
```

As we can see, this certificate covers the weave-gitops, grafana, and harbor applications. It is valid for 90 days and will be automatically renewed 15 days before it expires.

A few seconds after the certificate creation, a Kubernetes secret is generated.

```shell
kubectl describe certificates -n infrastructure private-gateway-certificate
Name:         private-gateway-certificate
Namespace:    infrastructure
API Version:  cert-manager.io/v1
Kind:         Certificate
...
Spec:
  Common Name:  private-gateway.priv.cloud.ogenki.io
  Dns Names:
    gitops-mycluster-0.priv.cloud.ogenki.io
    grafana-mycluster-0.priv.cloud.ogenki.io
    harbor.priv.cloud.ogenki.io
  Duration:  2160h0m0s
  Issuer Ref:
    Group:       cert-manager.io
    Kind:        ClusterIssuer
    Name:        vault
  Renew Before:  360h0m0s
  Secret Name:   private-gateway-tls
Status:
  Conditions:
    Last Transition Time:  2024-01-27T19:54:57Z
    Message:               Certificate is up to date and has not expired
    Observed Generation:   1
    Reason:                Ready
    Status:                True
    Type:                  Ready
  Not After:               2024-04-26T19:54:57Z
  Not Before:              2024-01-27T19:54:27Z
  Renewal Time:            2024-04-11T19:54:57Z
  Revision:                1
Events:
  Type    Reason     Age  From                                       Message
  ----    ------     ---- ----                                       -------
  Normal  Issuing    41m  cert-manager-certificates-trigger          Issuing certificate as Secret does not exist
  Normal  Generated  41m  cert-manager-certificates-key-manager      Stored new private key in temporary Secret resource "private-gateway-certificate-jggkv"
  Normal  Requested  41m  cert-manager-certificates-request-manager  Created new CertificateRequest resource "private-gateway-certificate-1"
  Normal  Issuing    38m  cert-manager-certificates-issuing          The certificate has been successfully issued
```
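The Renewal Time values in both kubectl describe outputs can be recomputed by hand. With an explicit renewBefore, renewal is due at Not After minus renewBefore. The Let's Encrypt certificate has no renewBefore, so this sketch assumes cert-manager's documented default: renew one third of the certificate's lifetime before expiry, rounded down to the second. The sketch relies on GNU date, and the function names are illustrative.

```shell
#!/usr/bin/env bash
# Recompute cert-manager's "Renewal Time". Assumes GNU date (coreutils).
set -euo pipefail

to_epoch() { date -u -d "$1" +%s; }
to_rfc3339() { date -u -d "@$1" +%Y-%m-%dT%H:%M:%SZ; }

# renewal_time NOT_AFTER RENEW_BEFORE_SECONDS: explicit spec.renewBefore.
renewal_time() {
  to_rfc3339 $(( $(to_epoch "$1") - $2 ))
}

# default_renewal_time NOT_BEFORE NOT_AFTER: renewBefore unset, so renewal is
# due lifetime/3 before expiry; rounding that instant down to whole seconds is
# the same as subtracting ceil(lifetime / 3).
default_renewal_time() {
  local not_before not_after lifetime
  not_before="$(to_epoch "$1")"
  not_after="$(to_epoch "$2")"
  lifetime=$(( not_after - not_before ))
  to_rfc3339 $(( not_after - (lifetime + 2) / 3 ))
}

renewal_time 2024-04-26T19:54:57Z $(( 360 * 3600 ))            # prints 2024-04-11T19:54:57Z
default_renewal_time 2024-01-24T19:43:25Z 2024-04-23T19:43:24Z  # prints 2024-03-24T19:43:24Z
```

Both results match the Renewal Time fields reported by cert-manager for the private and the public certificate.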

Finally, we just have to use this secret in the declaration of the private Gateway.

infrastructure/base/gapi/platform-private-gateway.yaml

```yaml
apiVersion: gateway.networking.k8s.io/v1beta1
kind: Gateway
metadata:
  name: platform-private
spec:
  gatewayClassName: cilium
  listeners:
    - name: http
      hostname: "*.priv.${domain_name}"
      ...
      tls:
        mode: Terminate
        certificateRefs:
          - name: private-gateway-tls
```

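We can check which certification authority issued the certificate using curl. Note that -k disables certificate verification. It is no longer needed once the client trusts the private root CA, either because the CA is installed in its trust store or because it is passed with --cacert.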

```shell
curl --verbose -k https://gitops-mycluster-0.priv.cloud.ogenki.io 2>&1 | grep 'issuer:'
* issuer: O=Ogenki; CN=Ogenki Vault Issuer
```

💭 Final Thoughts

❓ Who hasn't experienced an incident related to certificate renewal?

❓ How can we achieve a security level that meets enterprise standards?

❓ How can we simplify the operational tasks related to TLS certificate maintenance?

This article has explored a concrete answer to these questions. Thanks to the automation built with Cert-manager, operational tasks are minimized while enhancing the security level.

The setup process for Let's Encrypt and the Gateway API is incredibly straightforward! Furthermore, the level of security that Vault offers for internal communications is certainly worth taking into account. However, it's clear that implementing multiple components requires meticulous attention during the setup of this entire infrastructure to ensure optimal security is maintained.

It's important to recall some recommendations and best practices before considering a production deployment. To keep this article readable, some points have not been addressed, but it is crucial to include them in your strategy:

- Keep the root CA offline: its private key must be stored on a disconnected medium to protect it from potential threats.

- Revoking the root or intermediate CA, and publishing a Certificate Revocation List (CRL), were not covered.

- Access to the Vault API must be rigorously restricted to a private network. You should take a look at my article on Tailscale.

- Also note that I didn't talk about authentication at all, but it's essential to set up an identity provider from the start and enable multi-factor authentication (MFA) to enhance security. Moreover, it's advisable to revoke Vault's root token once adequate authentication and permissions are in place. If necessary, the token can be regenerated following the procedure available here.

- The default OpenTofu value for the AMI (Amazon Machine Image) deploys a Canonical Ubuntu image. It is recommended to use a hardened one instead. I built mine using this project.

- Initializing Vault requires running a command on the instance, which justifies the use of SSM. However, it is advisable to disable it once the initialization phase is over (enable_ssm = false in the OpenTofu variables).

- Send audit logs to a SIEM to be able to detect suspicious behavior.

- Alert before certificates expire. For example, you can use this Prometheus exporter open-sourced by the folks at Enix 😉. This is an additional safety net, even though the proposed architecture automates everything.

- Pay special attention to KMS keys: the one used to unseal Vault, but also the one that allows creating snapshots. They are vital for restoring your backups.

- "A backup that's not verified is worthless": Therefore, construct a workflow that will verify the consistency of data in Vault. This might be the subject of another article, stay tuned!

- Organize periodic disaster recovery (DR) exercises to ensure your ability to rebuild the entire system from scratch, making sure you have all the necessary documentation and tools.
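The Prometheus exporter can be complemented by a quick ad-hoc check based on openssl x509 -checkend. This option exits with a non-zero status when a certificate expires within the given number of seconds. The sketch below is minimal; the function name and the 15-day threshold are arbitrary choices.

```shell
#!/usr/bin/env bash
# Ad-hoc expiry check, complementing (not replacing) Prometheus alerting.
set -euo pipefail

# check_expiry PEM_FILE DAYS
# Returns 0 if the certificate is still valid DAYS days from now; otherwise
# prints a warning with its expiry date on stderr and returns 1.
check_expiry() {
  local pem="$1" days="$2"
  if ! openssl x509 -noout -in "${pem}" -checkend $(( days * 86400 )) > /dev/null; then
    echo "WARNING: ${pem} expires within ${days} days ($(openssl x509 -noout -in "${pem}" -enddate))" >&2
    return 1
  fi
}

# Usage with a certificate stored in a Kubernetes secret:
#   kubectl get secret -n infrastructure private-gateway-tls \
#     -o jsonpath='{.data.tls\.crt}' | base64 -d > /tmp/private-gateway.pem
#   check_expiry /tmp/private-gateway.pem 15
```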

🔖 References

Github Issues

- Cert-manager and Vault: "The CA full chain is not included into the ca.crt"

Blog posts

- Private vs Public PKI: Building an Effective Plan (Author: Nick Naziridis)

- PKI Best practices for 2023

- Build an Internal PKI with Vault (Author: Stéphane Este-Gracias)

Hashicorp Documentation

- Regarding the integrated Raft storage:

- Production hardening

- PKI