Applying GitOps Principles to Infrastructure: An overview of tf-controller

Overview

Weave GitOps is deprecated. I now use the Headlamp plugin to display Flux resources.

Terraform is probably the most used "Infrastructure as Code" tool for building, modifying, and versioning Cloud infrastructure. It is an Open Source project developed by HashiCorp that uses the HCL language to declare the desired state of Cloud resources. The state of the created resources is stored in a file called the Terraform state (tfstate).

Terraform can be considered a "semi-declarative" tool as there is no built-in automatic reconciliation feature. There are several solutions to address this issue, but generally speaking, a modification will be applied using terraform apply. The code is actually written using the HCL configuration files (declarative), but the execution is done imperatively.

As a result, there can be a drift between the declared and actual state (for example, a colleague who changed something directly in the console 😉).
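With the plain Terraform CLI, this kind of drift can be spotted by running a plan and looking at its exit code. The sketch below relies on the `-detailed-exitcode` flag of `terraform plan` (0: nothing to change, 1: error, 2: changes pending). The function names `classify_plan_exit` and `check_drift` are made up for this illustration.

```shell
# Map the exit code of `terraform plan -detailed-exitcode` to a status word.
classify_plan_exit() {
  case "$1" in
    0) echo "in-sync" ;;   # code and real infrastructure match
    2) echo "drift" ;;     # the plan succeeded and contains changes
    *) echo "error" ;;     # the plan itself failed
  esac
}

# Run a plan in the given directory (default: current one) and report the result.
check_drift() {
  dir="${1:-.}"
  rc=0
  terraform -chdir="$dir" plan -detailed-exitcode -input=false -lock=false \
    >/dev/null 2>&1 || rc=$?
  classify_plan_exit "$rc"
}
```

Such a check still has to be triggered by someone (or by a scheduled job), and it only reports the difference without reconciling anything.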

❓❓ So, how can I ensure that what is committed to Git is really applied? How can I be notified when the actual state differs from the desired state, and how can I automatically apply what is in my code (GitOps)?

This is the promise of tf-controller, an Open Source Kubernetes operator from Weaveworks, tightly related to Flux (a GitOps engine from the same company). Flux is one of the solutions I really appreciate, which is why I invite you to have a look at my previous article.

All the steps described in this article come from this Git repo

🎯 Our target

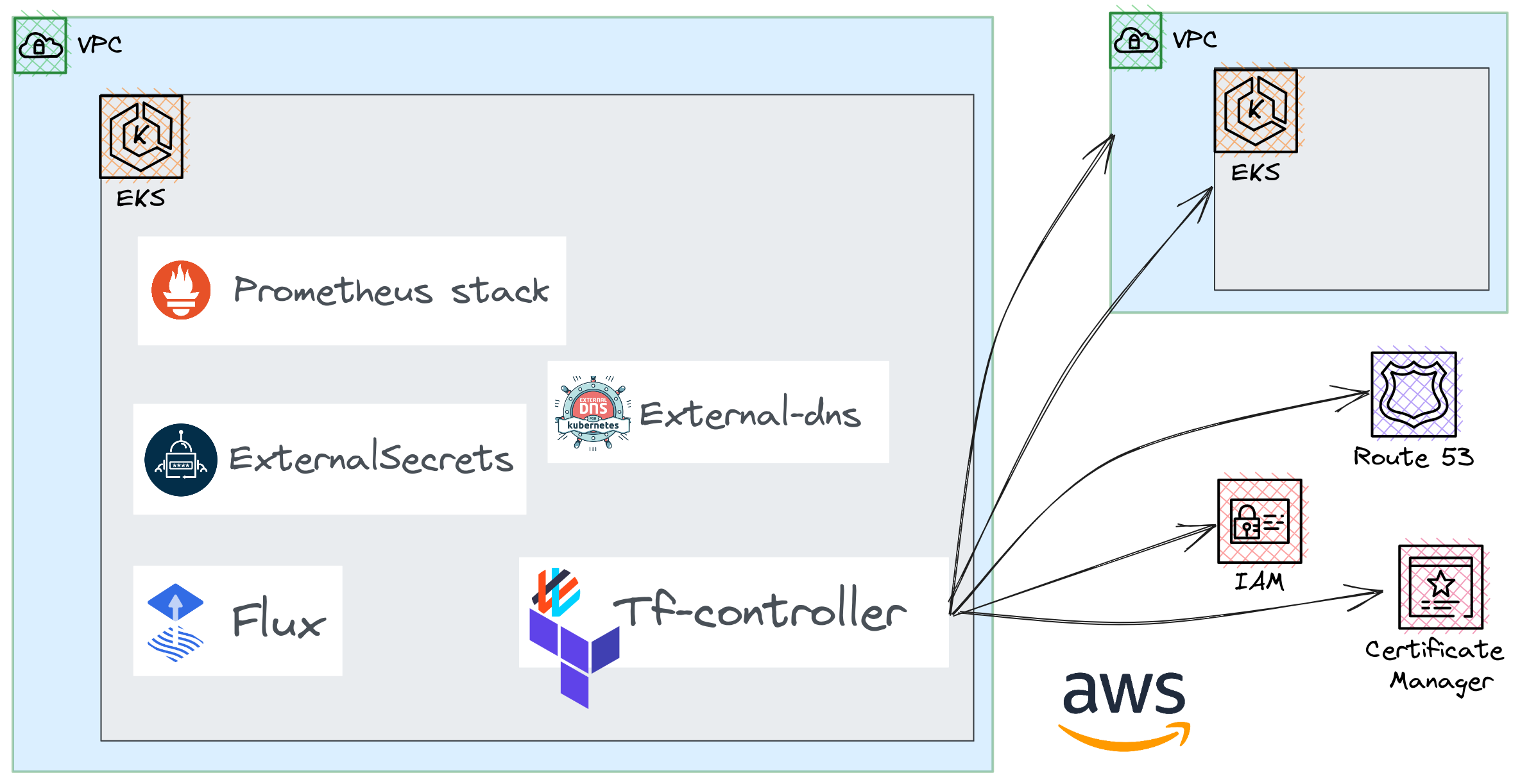

By following the steps in this article, we aim to achieve the following things:

- Deploy a Control plane EKS cluster. Long story short, it will host the Terraform controller that will be in charge of managing all the desired infrastructure components.

- Use Flux as the GitOps engine for all Kubernetes resources.

Regarding the Terraform controller, we will see:

- How to define dependencies between modules

- Creation of several AWS resources: Route53 Zone, ACM Certificate, network, EKS cluster.

- The different reconciliation options (automatic, requiring confirmation)

- How to back up and restore a Terraform state.

🛠️ Install the tf-controller

☸ The control plane

In order to be able to use the Kubernetes controller tf-controller, we first need a Kubernetes cluster 😆.

So we are going to create a control plane cluster using the terraform command line and EKS best practices.

It is crucial that this cluster is resilient, secure, and supervised as it will be responsible for managing all the AWS resources created subsequently.

Without going into detail, the control plane cluster was created using this code. That said, it is important to note that all application deployment operations are done using Flux.

By following the instructions in the README, an EKS cluster will be created, but not only that! The Terraform controller must also be given permissions so that it is able to apply infrastructure changes. Furthermore, Flux must be installed and configured to apply the configuration defined here.

We end up with several components installed and configured:

- The almost unavoidable addons: aws-loadbalancer-controller and external-dns

- IRSA roles for these same components are installed using tf-controller

- Prometheus / Grafana monitoring stack.

- external-secrets to be able to retrieve sensitive data from AWS Secrets Manager.

To demonstrate all this, after a few minutes the web interface for Flux is accessible via the URL gitops-<cluster_name>.<domain_name>.

Still, you should check that Flux is working properly:

    aws eks update-kubeconfig --name controlplane-0 --alias controlplane-0
    Updated context controlplane-0 in /home/smana/.kube/config

    flux check
    ...
    ✔ all checks passed

    flux get kustomizations
    NAME            REVISION            SUSPENDED  READY  MESSAGE
    flux-config     main@sha1:e2cdaced  False      True   Applied revision: main@sha1:e2cdaced
    flux-system     main@sha1:e2cdaced  False      True   Applied revision: main@sha1:e2cdaced
    infrastructure  main@sha1:e2cdaced  False      True   Applied revision: main@sha1:e2cdaced
    security        main@sha1:e2cdaced  False      True   Applied revision: main@sha1:e2cdaced
    tf-controller   main@sha1:e2cdaced  False      True   Applied revision: main@sha1:e2cdaced
    ...

📦 The Helm chart and Flux

Now that the control plane cluster is available, we can add the Terraform controller, which is just a matter of using the Helm chart as follows.

We must declare its Source first:

    apiVersion: source.toolkit.fluxcd.io/v1beta2
    kind: HelmRepository
    metadata:
      name: tf-controller
    spec:
      interval: 30m
      url: https://weaveworks.github.io/tf-controller

Then we need to define the HelmRelease:

    apiVersion: helm.toolkit.fluxcd.io/v2beta1
    kind: HelmRelease
    metadata:
      name: tf-controller
    spec:
      releaseName: tf-controller
      chart:
        spec:
          chart: tf-controller
          sourceRef:
            kind: HelmRepository
            name: tf-controller
            namespace: flux-system
          version: "0.12.0"
      interval: 10m0s
      install:
        remediation:
          retries: 3
      values:
        resources:
          limits:
            memory: 1Gi
          requests:
            cpu: 200m
            memory: 500Mi
        runner:
          serviceAccount:
            annotations:
              eks.amazonaws.com/role-arn: "arn:aws:iam::${aws_account_id}:role/tfcontroller_${cluster_name}"

Once this change is pushed to Git, the HelmRelease is deployed and tf-controller starts:

    kubectl get hr -n flux-system
    NAME           AGE  READY  STATUS
    tf-controller  67m  True   Release reconciliation succeeded

    kubectl get po -n flux-system -l app.kubernetes.io/instance=tf-controller
    NAME                            READY  STATUS   RESTARTS  AGE
    tf-controller-7ffdc69b54-c2brg  1/1    Running  0         2m6s

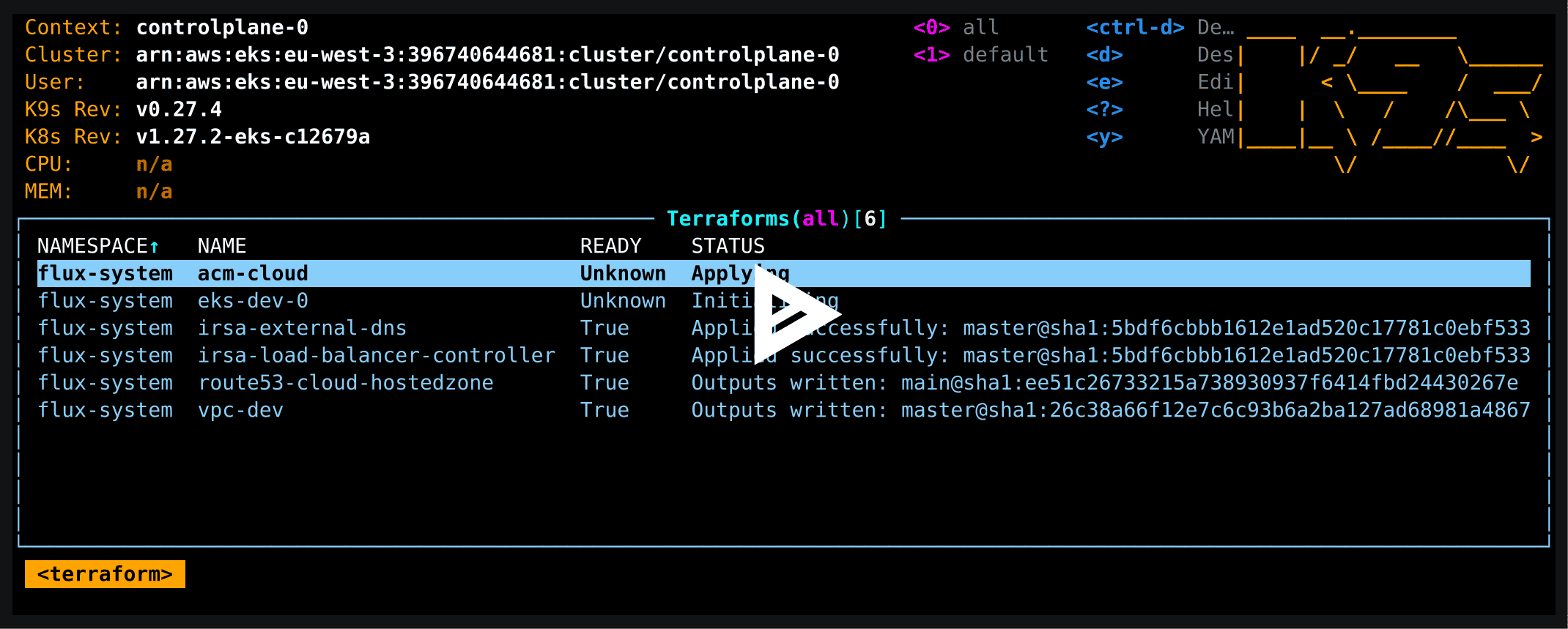

In this demo, several AWS resources are already declared. Therefore, after a few minutes, the cluster takes care of creating them.

Although the majority of operations are performed declaratively or via the kubectl and flux CLIs, another tool can be used to manage Terraform resources: tfctl.

🚀 Apply a change

One of Terraform's best practices is to use modules. A module is a set of logically linked Terraform resources bundled into a single reusable unit. Modules abstract complexity, take inputs, perform specific actions, and produce outputs.

You can create your own modules and make them available as Sources, or use the many modules shared and maintained by the community. You just need to specify a few variables to fit your context.

With tf-controller, the first step is therefore to define the Source of the module. Here we are going to configure the AWS base networking components (vpc, subnets...) using the terraform-aws-vpc module.

    apiVersion: source.toolkit.fluxcd.io/v1
    kind: GitRepository
    metadata:
      name: terraform-aws-vpc
      namespace: flux-system
    spec:
      interval: 30s
      ref:
        tag: v5.0.0
      url: https://github.com/terraform-aws-modules/terraform-aws-vpc

Then we can make use of this Source within a Terraform resource:

    apiVersion: infra.contrib.fluxcd.io/v1alpha2
    kind: Terraform
    metadata:
      name: vpc-dev
    spec:
      interval: 8m
      path: .
      destroyResourcesOnDeletion: true # You wouldn't do that on a prod env ;)
      storeReadablePlan: human
      sourceRef:
        kind: GitRepository
        name: terraform-aws-vpc
        namespace: flux-system
      vars:
        - name: name
          value: vpc-dev
        - name: cidr
          value: "10.42.0.0/16"
        - name: azs
          value:
            - "eu-west-3a"
            - "eu-west-3b"
            - "eu-west-3c"
        - name: private_subnets
          value:
            - "10.42.0.0/19"
            - "10.42.32.0/19"
            - "10.42.64.0/19"
        - name: public_subnets
          value:
            - "10.42.96.0/24"
            - "10.42.97.0/24"
            - "10.42.98.0/24"
        - name: enable_nat_gateway
          value: true
        - name: single_nat_gateway
          value: true
        - name: private_subnet_tags
          value:
            "kubernetes.io/role/elb": 1
            "karpenter.sh/discovery": dev
        - name: public_subnet_tags
          value:
            "kubernetes.io/role/elb": 1
      writeOutputsToSecret:
        name: vpc-dev

In summary: the Terraform code from the terraform-aws-vpc source is applied using the variables defined within vars.

Several parameters then influence the tf-controller behavior. The main one controlling how modifications are applied is .spec.approvePlan, which the following sections set to disable, leave unset for manual approval, or set to auto.

🚨 Drift detection

Setting spec.approvePlan to disable only notifies that the current state of resources has drifted from the Terraform code.

This allows you to choose when and how to apply the changes.

It is worth noting that this article is missing a section on notifications: drift, pending plans, reconciliation problems. I'm trying to identify possible methods (preferably with Prometheus) and will update this article as soon as possible.

🔧 Manual execution

The vpc-dev example above does not set the .spec.approvePlan parameter, which is therefore left at its default (empty) value.

In other words, the actual execution of changes (apply) is not done automatically.

A plan is executed and will be waiting for validation:

    tfctl get
    NAMESPACE    NAME     READY    MESSAGE                                                                                                      PLAN PENDING  AGE
    ...
    flux-system  vpc-dev  Unknown  Plan generated: set approvePlan: "plan-v5.0.0-26c38a66f12e7c6c93b6a2ba127ad68981a48671" to approve this plan.  true          2 minutes

I also advise setting the storeReadablePlan parameter to human. This allows you to easily visualize the pending modifications using tfctl:

    tfctl show plan vpc-dev

    Terraform used the selected providers to generate the following execution
    plan. Resource actions are indicated with the following symbols:
      + create

    Terraform will perform the following actions:

      # aws_default_network_acl.this[0] will be created
      + resource "aws_default_network_acl" "this" {
          + arn                    = (known after apply)
          + default_network_acl_id = (known after apply)
          + id                     = (known after apply)
          + owner_id               = (known after apply)
          + tags                   = {
              + "Name" = "vpc-dev-default"
            }
          + tags_all               = {
              + "Name" = "vpc-dev-default"
            }
          + vpc_id                 = (known after apply)

          + egress {
              + action          = "allow"
              + from_port       = 0
              + ipv6_cidr_block = "::/0"
              + protocol        = "-1"
              + rule_no         = 101
              + to_port         = 0
            }
          + egress {
    ...
    Plan generated: set approvePlan: "plan-v5.0.0@sha1:26c38a66f12e7c6c93b6a2ba127ad68981a48671" to approve this plan.
    To set the field, you can also run:

      tfctl approve vpc-dev -f filename.yaml

After reviewing the above modifications, you just need to add the identifier of the plan to validate and push the change to git as follows:

    apiVersion: infra.contrib.fluxcd.io/v1alpha2
    kind: Terraform
    metadata:
      name: vpc-dev
    spec:
    ...
      approvePlan: plan-v5.0.0-26c38a66f1
    ...
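Copying the plan identifier by hand is error-prone. As a small convenience, the hypothetical helper below (`extract_plan_id` is not part of tfctl) pulls it out of the message printed by `tfctl get` or `tfctl show plan`. It is plain text processing with sed and assumes the message keeps the `set approvePlan: "<id>"` wording seen in the outputs above.

```shell
# Read a tf-controller status message on stdin and print the plan identifier
# found between the quotes of: set approvePlan: "<plan id>"
# Prints nothing when no pending plan is mentioned.
extract_plan_id() {
  sed -n 's/.*set approvePlan: "\([^"]*\)".*/\1/p'
}
```

For example, `tfctl get | grep vpc-dev | extract_plan_id` would print the identifier to paste into the manifest.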

After a few seconds, a runner will be launched and will apply the changes:

    kubectl logs -f -n flux-system vpc-dev-tf-runner
    2023/07/01 15:33:36 Starting the runner... version sha
    ...
    aws_vpc.this[0]: Creating...
    aws_vpc.this[0]: Still creating... [10s elapsed]
    ...
    aws_route_table_association.private[1]: Creation complete after 0s [id=rtbassoc-01b7347a7e9960a13]
    aws_nat_gateway.this[0]: Still creating... [10s elapsed]

As soon as the apply is finished, the status of the Terraform resource becomes "READY":

    kubectl get tf -n flux-system vpc-dev
    NAME     READY  STATUS                                                                 AGE
    vpc-dev  True   Outputs written: v5.0.0@sha1:26c38a66f12e7c6c93b6a2ba127ad68981a48671  17m
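Instead of polling with `kubectl get`, a script can block until the object is ready. This is a minimal sketch built on `kubectl wait`, assuming the Terraform object exposes a standard `Ready` condition (which the READY column above suggests). The function name `wait_for_terraform` and the 15 minute default timeout are arbitrary choices of mine.

```shell
# Block until the Terraform object reports Ready=True, or fail after the timeout.
# Arguments: object name, namespace (default: flux-system), timeout (default: 15m).
wait_for_terraform() {
  name="$1"
  namespace="${2:-flux-system}"
  timeout="${3:-15m}"
  kubectl wait "terraforms.infra.contrib.fluxcd.io/${name}" -n "$namespace" \
    --for=condition=Ready --timeout="$timeout"
}
```

Usage: `wait_for_terraform vpc-dev` returns a non-zero exit code if the apply has not completed in time, which makes it easy to chain with other commands.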

🤖 Automatic reconciliation

We can also enable automatic reconciliation. In the manifest below, this is done by setting the .spec.approvePlan parameter to auto.

All IRSA resources are configured in this way:

    apiVersion: infra.contrib.fluxcd.io/v1alpha2
    kind: Terraform
    metadata:
      name: irsa-external-secrets
    spec:
      approvePlan: auto
      destroyResourcesOnDeletion: true
      interval: 8m
      path: ./modules/iam-role-for-service-accounts-eks
      sourceRef:
        kind: GitRepository
        name: terraform-aws-iam
        namespace: flux-system
      vars:
        - name: role_name
          value: ${cluster_name}-external-secrets
        - name: attach_external_secrets_policy
          value: true
        - name: oidc_providers
          value:
            main:
              provider_arn: ${oidc_provider_arn}
              namespace_service_accounts: ["security:external-secrets"]

So if I make a change in the AWS console, for example, it will quickly be overwritten by the configuration managed by tf-controller.

The deletion policy of components created by a Terraform resource is controlled by the setting destroyResourcesOnDeletion. By default anything created is not destroyed by the controller. If you want to destroy the resources when the Terraform object is deleted you must set this parameter to true.

Here we want to be able to delete IRSA roles because they're tightly linked to a given EKS cluster.

🔄 Inputs/Outputs and modules dependencies

When using Terraform, we often need to share data from one module to another. This is done using the outputs that are defined within modules. So we need a way to store them somewhere and import them into another module.

Let's go back to the vpc-dev example above. At the bottom of the YAML file, we can see the following block:

    ...
      writeOutputsToSecret:
        name: vpc-dev

When this resource is applied, we will get a message confirming that the outputs are available ("Outputs written"):

    kubectl get tf -n flux-system vpc-dev
    NAME     READY  STATUS                                                                 AGE
    vpc-dev  True   Outputs written: v5.0.0@sha1:26c38a66f12e7c6c93b6a2ba127ad68981a48671  17m

Indeed, this module exports a lot of information (126 entries).

    kubectl get secrets -n flux-system vpc-dev
    NAME     TYPE    DATA  AGE
    vpc-dev  Opaque  126   15s

    kubectl get secret -n flux-system vpc-dev --template='{{.data.vpc_id}}' | base64 -d
    vpc-0c06a6d153b8cc4db

Some of these are then used to create a dev EKS cluster. Note that you don't have to read them all: you can cherry-pick a few chosen outputs from the secret.

    ...
      varsFrom:
        - kind: Secret
          name: vpc-dev
          varsKeys:
            - vpc_id
            - private_subnets
    ...
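Before wiring outputs into varsFrom, it helps to see which keys the secret actually contains and what a given key holds. The two helpers below are a sketch wrapping the same `kubectl get secret` call used earlier. The names `tf_output` and `tf_output_keys` are hypothetical, and `flux-system` is only assumed as the default namespace because this demo uses it.

```shell
# Print one decoded output from the Secret created by writeOutputsToSecret.
# Arguments: secret name, output key, namespace (default: flux-system).
tf_output() {
  kubectl get secret "$1" -n "${3:-flux-system}" \
    -o go-template="{{ index .data \"$2\" }}" | base64 -d
}

# List the names of all outputs stored in the Secret, one per line.
# Arguments: secret name, namespace (default: flux-system).
tf_output_keys() {
  kubectl get secret "$1" -n "${2:-flux-system}" \
    -o go-template='{{ range $key, $value := .data }}{{ $key }}{{ "\n" }}{{ end }}'
}
```

For example, `tf_output_keys vpc-dev` lists the available keys and `tf_output vpc-dev vpc_id` prints the decoded VPC identifier.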

💾 Backup and restore a tfstate

For my demos, I don't want to recreate the zone and the certificate each time the control plane is destroyed (The DNS propagation and certificate validation take time). Here is an example of the steps to take so that I can restore the state of these resources when I use this demo.

This is a manual procedure to demonstrate the behavior of tf-controller with respect to state files. By default, these tfstates are stored in secrets, but we would prefer to configure a GCS or S3 backend.

The initial creation of the demo environment allowed me to save the state files (tfstate) as follows.

    WORKSPACE="default"
    STACK="route53-cloud-hostedzone"
    BACKUPDIR="${HOME}/tf-controller-backup"

    mkdir -p "${BACKUPDIR}"

    kubectl get secrets -n flux-system "tfstate-${WORKSPACE}-${STACK}" -o jsonpath='{.data.tfstate}' | \
    base64 -d > "${BACKUPDIR}/${WORKSPACE}-${STACK}.tfstate.gz"
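A backup is only useful if it can be read back, so I find it worth checking the file right after saving it. The sketch below verifies that the file is a valid gzip archive and that its content looks like a Terraform state, using the top-level `lineage` field as a marker. The function name `verify_tfstate_backup` is hypothetical, and the check is deliberately shallow: it does not validate the JSON.

```shell
# Return 0 if the file is a valid gzip archive that looks like a Terraform state.
verify_tfstate_backup() {
  file="$1"
  # First make sure the archive itself is intact.
  gzip -t "$file" 2>/dev/null \
    || { echo "not a valid gzip file: $file" >&2; return 1; }
  # A Terraform state is a JSON document with a top-level "lineage" field.
  gzip -dc "$file" | grep -q '"lineage"' \
    || { echo "no \"lineage\" field found in: $file" >&2; return 1; }
}
```

Usage: `verify_tfstate_backup "${BACKUPDIR}/${WORKSPACE}-${STACK}.tfstate.gz" && echo "backup OK"`.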

When the cluster is created again, tf-controller tries to create the zone because the state file is empty.

    tfctl get
    NAMESPACE    NAME                      READY    MESSAGE                                                                                                          PLAN PENDING  AGE
    ...
    flux-system  route53-cloud-hostedzone  Unknown  Plan generated: set approvePlan: "plan-main@sha1:345394fb4a82b9b258014332ddd556dde87f73ab" to approve this plan.  true          16 minutes

    tfctl show plan route53-cloud-hostedzone

    Terraform used the selected providers to generate the following execution
    plan. Resource actions are indicated with the following symbols:
      + create

    Terraform will perform the following actions:

      # aws_route53_zone.this will be created
      + resource "aws_route53_zone" "this" {
          + arn                 = (known after apply)
          + comment             = "Experimentations for blog.ogenki.io"
          + force_destroy       = false
          + id                  = (known after apply)
          + name                = "cloud.ogenki.io"
          + name_servers        = (known after apply)
          + primary_name_server = (known after apply)
          + tags                = {
              + "Name" = "cloud.ogenki.io"
            }
          + tags_all            = {
              + "Name" = "cloud.ogenki.io"
            }
          + zone_id             = (known after apply)
        }

    Plan: 1 to add, 0 to change, 0 to destroy.

    Changes to Outputs:
      + domain_name = "cloud.ogenki.io"
      + nameservers = (known after apply)
      + zone_arn    = (known after apply)
      + zone_id     = (known after apply)

    Plan generated: set approvePlan: "plan-main@345394fb4a82b9b258014332ddd556dde87f73ab" to approve this plan.
    To set the field, you can also run:

      tfctl approve route53-cloud-hostedzone -f filename.yaml

So we need to restore the Terraform state as it was when the cloud resources were initially created.

    cat <<EOF | kubectl apply -f -
    apiVersion: v1
    kind: Secret
    metadata:
      name: tfstate-${WORKSPACE}-${STACK}
      namespace: flux-system
      annotations:
        encoding: gzip
    type: Opaque
    data:
      tfstate: $(cat ${BACKUPDIR}/${WORKSPACE}-${STACK}.tfstate.gz | base64 -w 0)
    EOF
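One portability note on the command above: `-w 0` is an option of the GNU coreutils `base64`, and it may not be available on other platforms. A possible workaround, sketched below with a hypothetical helper name, is to strip the line breaks with `tr` instead.

```shell
# Base64-encode a file on a single line without relying on `base64 -w 0`.
b64_oneline() {
  base64 < "$1" | tr -d '\n'
}
```

In the Secret manifest, the value of `tfstate` could then be produced with `$(b64_oneline "${BACKUPDIR}/${WORKSPACE}-${STACK}.tfstate.gz")`.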

You will also need to trigger a plan manually:

    tfctl replan route53-cloud-hostedzone
     Replan requested for flux-system/route53-cloud-hostedzone
    Error: timed out waiting for the condition

We can then check that the state file has been updated:

    tfctl get
    NAMESPACE    NAME                      READY  MESSAGE                                                                PLAN PENDING  AGE
    flux-system  route53-cloud-hostedzone  True   Outputs written: main@sha1:d0934f979d832feb870a8741ec01a927e9ee6644  false         19 minutes

🔍 Focus on Key Features of Flux

Well, I lied a bit about the agenda 😝. Indeed I want to highlight two features that I hadn't explored until now and that are very useful!

Variable Substitution

When Flux is initialized, some cluster-specific Kustomization files are applied.

It is possible to specify variable substitutions within these files, so that they can be used in all resources deployed by this Kustomization. This helps to minimize code duplication.

I recently discovered the efficiency of this feature. Here is how I use it:

The Terraform code that creates an EKS cluster also generates a ConfigMap that contains cluster-specific variables such as the cluster name, as well as all the parameters that vary between clusters.

    resource "kubernetes_config_map" "flux_clusters_vars" {
      metadata {
        name      = "eks-${var.cluster_name}-vars"
        namespace = "flux-system"
      }

      data = {
        cluster_name      = var.cluster_name
        oidc_provider_arn = module.eks.oidc_provider_arn
        aws_account_id    = data.aws_caller_identity.this.account_id
        region            = var.region
        environment       = var.env
        vpc_id            = module.vpc.vpc_id
      }
      depends_on = [flux_bootstrap_git.this]
    }

As mentioned previously, variable substitutions are defined in the Kustomization files. Let's take a concrete example. Below, we define the Kustomization that deploys all the resources controlled by the tf-controller. Here, we declare the eks-controlplane-0-vars ConfigMap that was generated during the EKS cluster creation.

    apiVersion: kustomize.toolkit.fluxcd.io/v1
    kind: Kustomization
    metadata:
      name: tf-custom-resources
      namespace: flux-system
    spec:
      prune: true
      interval: 4m0s
      path: ./infrastructure/controlplane-0/opentofu/custom-resources
      postBuild:
        substitute:
          domain_name: "cloud.ogenki.io"
        substituteFrom:
          - kind: ConfigMap
            name: eks-controlplane-0-vars
          - kind: Secret
            name: eks-controlplane-0-vars
            optional: true
      sourceRef:
        kind: GitRepository
        name: flux-system
      dependsOn:
        - name: tf-controller

Finally, below is an example of a Kubernetes resource that makes use of it. This single manifest can be used by all clusters!

    apiVersion: helm.toolkit.fluxcd.io/v2beta1
    kind: HelmRelease
    metadata:
      name: external-dns
    spec:
    ...
      values:
        global:
          imageRegistry: public.ecr.aws
        fullnameOverride: external-dns
        aws:
          region: ${region}
          zoneType: "public"
          batchChangeSize: 1000
        domainFilters: ["${domain_name}"]
        logFormat: json
        txtOwnerId: "${cluster_name}"
        serviceAccount:
          annotations:
            eks.amazonaws.com/role-arn: "arn:aws:iam::${aws_account_id}:role/${cluster_name}-external-dns"

This significantly reduces the number of overlays that were needed to patch cluster-specific parameters.
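To get a feel for what the substitution does to a manifest, here is a rough local approximation written with sed. It is not how Flux implements the feature: the function `substitute_vars` is hypothetical, it only handles the plain `${name}` form, and values containing `|` or `&` would need escaping.

```shell
# Replace ${key} placeholders read on stdin with the given values.
# Usage: substitute_vars key1=value1 key2=value2 < manifest.yaml
substitute_vars() {
  script=""
  for pair in "$@"; do
    key="${pair%%=*}"      # text before the first '='
    value="${pair#*=}"     # text after the first '='
    # Build one sed substitution per variable, e.g. s|\${region}|eu-west-3|g;
    script="${script}s|\\\${${key}}|${value}|g;"
  done
  sed -e "$script"
}
```

For example, piping the external-dns manifest above through `substitute_vars region=eu-west-3 cluster_name=controlplane-0` shows the values a given cluster would end up with, while placeholders that were not passed stay untouched.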

Web UI (Weave GitOps)

In my previous article on Flux, I mentioned one of its downsides (when compared to its main competitor, ArgoCD): the lack of a Web interface. While I am a command line guy, it is sometimes useful to have a consolidated view and the ability to perform some operations with just a few clicks 🖱️

This is now possible with Weave GitOps! Of course, it is not comparable to ArgoCD's UI, but the essentials are there: pausing reconciliation, visualizing manifests, dependencies, events...

There is also the VSCode plugin as an alternative.

💭 Final thoughts

One might say "yet another infrastructure management tool from Kubernetes". Well this is true but despite a few minor issues faced along the way, which I shared on the project's Git repo, I really enjoyed the experience. tf-controller provides a concrete answer to a common question: how to manage our infrastructure like we manage our code?

I really like the GitOps approach applied to infrastructure, and I had actually written an article on Crossplane.

tf-controller tackles the problem from a different angle: using Terraform directly. This means that we can leverage our existing knowledge and code. There's no need to learn a new way of declaring our resources. This is an important criterion to consider because migrating to a new tool when you already have an existing infrastructure represents a significant effort. However, I would also add that tf-controller is only targeted at Flux users, which restricts its target audience.

Currently, I'm using a combination of Terraform, Terragrunt and RunAtlantis. tf-controller could become a serious alternative: we've talked about the value of Kustomize combined with variable substitutions to avoid code duplication. The project's roadmap also aims to display plans in pull requests.

Another frequent need is to pass sensitive information to modules. Using a Terraform resource, we can inject variables from Kubernetes secrets. This makes it possible to use common secrets management tools, such as external-secrets, sealed-secrets ...

So, I encourage you to try tf-controller yourself, and perhaps even contribute to it 🙂

- The demo I used creates quite a few resources, some of which are quite critical (like the network). So, keep in mind that this is just for the demo! I suggest taking a gradual approach if you plan to implement it: start by using drift detection, then create simple resources.

- I also took some shortcuts in terms of security that should be avoided, such as giving admin rights to the controller.